“The simple is complex”: Professor Giora Hon starts the c:o/re 2023 lecture series on Complexity

On April 12, 2023, the 5th c:o/re lecture series started off. After c:o/re director Gabriele Gramelsberger welcomed attendees and introduced the speaker, Professor Giora Hon delivered his talk, From Reciprocity of Formulation to Symbolic Language: A Source of Complexity in Scientific Knowledge.

Professor Hon introduced his notion of epistemic complexity, which refers to what may be considered simple, rather than complex. The talk was directed at clarifying the oxymoron “the simple is complex”. Professor Hon does so in light of three developments in the history of physics:

- the analogy between heat and electricity, following William Thomson

- the reciprocity of text and symbolic formulation, following James C. Maxwell

- liberating symbolic formulation from theory, following Heinrich Hertz

Professor Hon argued that, following this trajectory of ideas in the history of physics, the complete separation of formal theory and equations can be observed in Hertz.

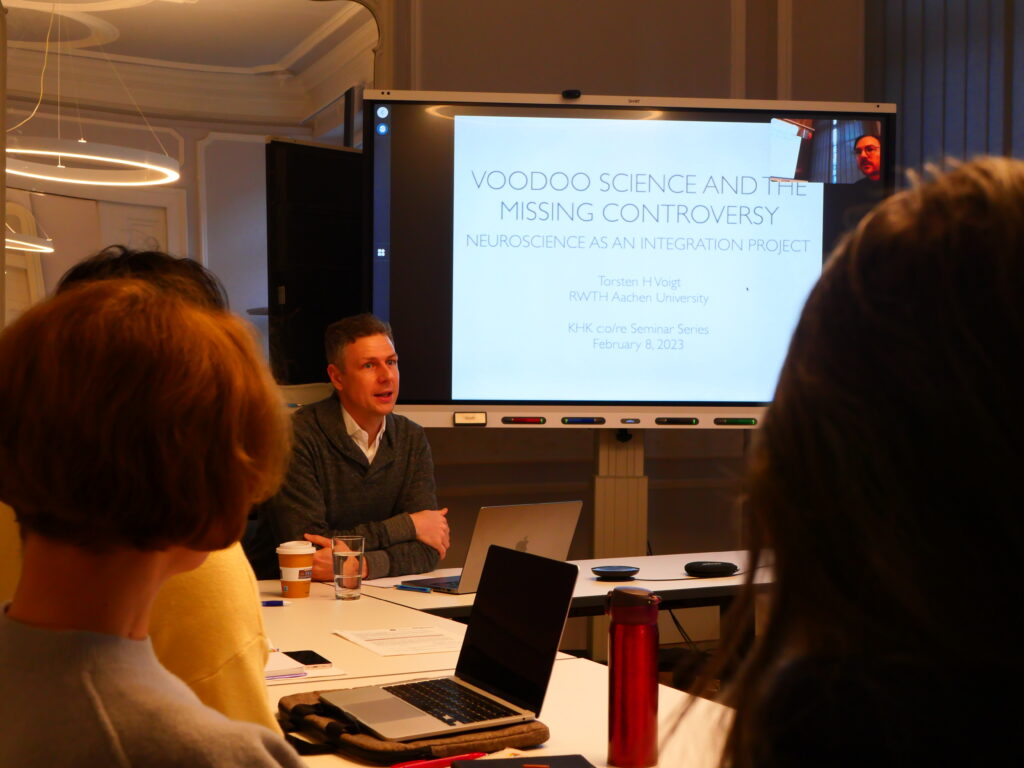

Torsten H. Voigt on voodoo science, dead salmons and the Human Brain

On February 7, Professor Torsten H. Voigt, the Dean of the Faculty of Arts and Humanities at RWTH Aachen University, delivered a talk in the c:o/re lecture series on what has been referred to as “Voodoo science”. Professor Voigt argued that, as in science and philosophy in general, controversy functions as a driver for advancement and innovation in neuroscience. However, he explained, neuroscience as a discipline and community exhibits instrumental rationality in managing and avoiding meaningful controversy. This has led to what may be termed an eclipse of reason, damaging or even destroying progress in the scientific field.

Neuroscience is enjoying great popularity, both within academia and in pop culture and popular science. During the 1980s, cognitive neuroscience became the new science of mind by incorporating molecular biology. This resulted in the study, on a molecular level, of how we think, feel and learn. Seen in this way, advertising of consumer goods, for example, reflects a connection established in pop culture between human capacities for creativity and the brain as an organ. These construals are not only employed in somewhat amusing ways in advertising, but they also point to an unjustified optimism in academia. One reason why this optimism may be allowed to persist, and which also raises suspicions about neuroscientific methods, might be the very low reproducibility of results in the field.

An important example that illustrates this type of process leading to an eclipse of reason, as Voigt argues, is how the otherwise noted study by Vul et al. (2009) was largely ignored in neuroscientific research. The reluctance with which this study was arguably met from the start is suggested by the journal’s editorial board recommending the removal of the expression “voodoo correlations” from the title of the paper, as initially proposed by the authors.

Vul et al. (2009) observe that mysteriously high correlations are claimed in neuroscientific research. This is explained by the fact that many experiments looked at a specific brain region, instead of the whole brain as such, in relation to behaviour. Despite drawing attention, the study has been ignored (low number of citations) by the community of neuroscientific researchers.
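
One way to see how such correlations can arise is a small simulation. The sketch below is not the analysis of Vul et al. (2009); it is a toy model under stated assumptions: every "voxel" is pure noise, unrelated to the behavioural score, and a "region" is defined by keeping only the voxels whose correlation with behaviour exceeds a threshold. The function names, the threshold of 0.6 and the sample sizes are illustrative choices, not values from the paper.

```python
import random
from statistics import fmean


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long samples."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate_selection(n_subjects=16, n_voxels=2000, threshold=0.6, seed=0):
    """Correlate noise voxels with a noise behaviour score, then select."""
    rng = random.Random(seed)
    # Hypothetical behavioural score, one value per subject.
    behaviour = [rng.gauss(0, 1) for _ in range(n_subjects)]
    correlations = []
    for _ in range(n_voxels):
        # Each voxel is independent noise: the true correlation is zero.
        voxel = [rng.gauss(0, 1) for _ in range(n_subjects)]
        correlations.append(pearson(voxel, behaviour))
    # Selecting on the same statistic that is later reported inflates it.
    selected = [r for r in correlations if r > threshold]
    return correlations, selected


all_r, selected_r = simulate_selection()
print(f"mean r over all voxels: {fmean(all_r):+.3f}")
print(f"voxels passing the threshold: {len(selected_r)}")
if selected_r:
    print(f"mean r in the selected 'region': {fmean(selected_r):+.3f}")
```

Across all voxels the correlations average out near zero, while the selected subset shows a high correlation by construction, since only voxels above the threshold were kept.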

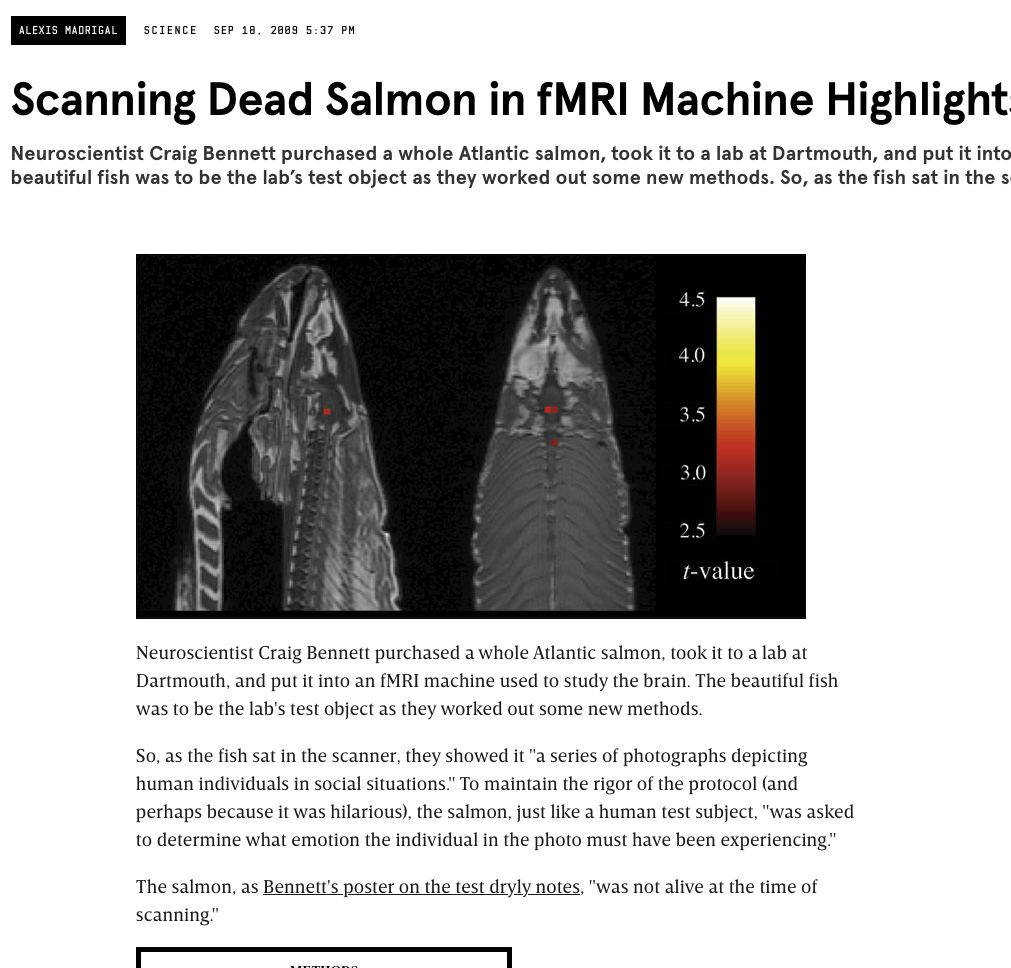

A prototype for signaling controversial matters in neuroscience, setting the tone of doing so in a controversial manner, is the famous “dead salmon” paper by Bennett et al. (2009), who ironically criticised neuroscientific methods by reporting correlations with supposed neural activity observed through fMRI in a dead salmon. The salmon, which “was not alive at the time of scanning”, “was shown a series of photographs depicting individuals in social situations with a specified emotional valence.” As neural activity could arguably be noticed in the image resulting from the scanning, the authors ironically claimed “neural correlates of interspecies perspective taking”.
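
The subtitle of the salmon paper is “an argument for multiple comparisons correction”. The following sketch illustrates that argument in a simplified setting and does not reproduce the authors' procedure. It assumes noise-only voxels with known unit variance, a two-sided z-test per voxel, and a Bonferroni correction as a simple stand-in for whichever correction one prefers. All names and parameter values are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def count_active(n_voxels=5000, n_scans=20, alpha=0.05, seed=1):
    """Count 'active' voxels in pure noise, with and without correction."""
    rng = random.Random(seed)
    # Two-sided critical values for a standard normal test statistic.
    z_uncorrected = NormalDist().inv_cdf(1 - alpha / 2)
    z_bonferroni = NormalDist().inv_cdf(1 - alpha / (2 * n_voxels))
    uncorrected = corrected = 0
    for _ in range(n_voxels):
        # No real signal anywhere: every voxel is noise around zero.
        signal = [rng.gauss(0, 1) for _ in range(n_scans)]
        z = fmean(signal) * n_scans ** 0.5  # assumes known unit variance
        if abs(z) > z_uncorrected:
            uncorrected += 1
        if abs(z) > z_bonferroni:
            corrected += 1
    return uncorrected, corrected


uncorrected, corrected = count_active()
print(f"'active' voxels without correction: {uncorrected}")
print(f"'active' voxels with Bonferroni correction: {corrected}")
```

With thousands of tests at the 5% level, a few hundred voxels are expected to look active by chance alone; the corrected threshold removes nearly all of them.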

Another example that displays the eclipse-of-reason tendencies in neuroscience is the Human Brain Project, massively funded by the European Union. Arguably, the Human Brain Project is not so much about the brain as it turned out to be an IT infrastructure development project (Nature 2015). With such an example in mind, Voigt construes neuroscience, broadly, as an integration project.

References

Vul, E., Harris, C., Winkielman, P., Pashler, H. 2009. Puzzlingly High Correlations in fMRI Studies of Emotion, Personality, and Social Cognition. Perspect. Psychol. Sci. 4(3): 274–290.

Rethinking the brain. 2015. Nature 519, 389. https://doi.org/10.1038/519389a

Bennett, C.M., Miller, M.B., Wolford, G.L. 2009. Neural correlates of interspecies perspective taking in the post-mortem Atlantic Salmon: an argument for multiple comparisons correction. NeuroImage, 47, S125.

Varieties of science, 1: Patterns of knowledge

On December 5th and 6th the first Varieties of Science workshop, titled Patterns of Knowledge, took place at the National Autonomous University of Mexico (UNAM). Varieties of Science is a series of workshops, organized by c:o/re, that aims to explore the pluralities of knowledge production. The workshop Patterns of Knowledge brought together scholars from c:o/re, the hosting university in Mexico City, as well as the Science, Technology and Society Studies Centre and the Digital Aesthetics Research Center of Aarhus University. We would like to thank Miriam Peña and Francisco Barrón of UNAM for hosting this event. It was live streamed on the YouTube channel of the Seminario Tecnologías Filosóficas, where it can be watched. We will soon post a full report of this event.

Fernando Pasquini’s proposal for an ergonomics of data science practices

On December 14, 2022, c:o/re fellow Fernando Pasquini Santos explained his proposal Towards an ergonomics of data science practices, as part of the 2022/2023 c:o/re lecture series. While he acknowledged that the term is arguably outdated, Pasquini developed a broadly encompassing notion of ergonomics, spanning across modalities and modes of human-computer interaction. In this endeavour, Pasquini started by asking “how does it feel to work with data?” Tackling the question, he distinguished between challenges and directions in technology usability assessments.

In what was a rich and broadly encompassing study, Pasquini found particular inspiration in Coeckelbergh (2019), who notices a tradition in philosophy of technology that equates skilful acting with having a good life.

In this light, Pasquini proposed a “critical mediality” perspective in data science, that covers considerations from abstraction in data work to mathematical constructivism, embodiment and to blackboxing.

References

Coeckelbergh, Mark. 2019. Technology as Skill and Activity: Revisiting the Problem of Alienation. Techné: Research in Philosophy and Technology 16(3): 208–230.

Joost-Pieter Katoen demystifies probabilistic modeling

On July 13th, Professor Joost-Pieter Katoen (RWTH Aachen University) gave the final lecture of the Philosophy of AI: Optimistic and Pessimistic Views series at c:o/re, titled “Demystifying probabilistic programming”. The talk convincingly advocated the usefulness and accuracy of probabilistic inferences as performed by computers. Various types of machine learning, argued Joost-Pieter Katoen, can benefit from being developed through probabilistic programming. The underlying claim is that probabilistic programs are a universal modeling formalism. Far from implying that this could result in software that could successfully replace humans in inferential and decision-making processes, probabilistic programming relies on correct parameterisation, which is an input provided by humans.
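
To make the idea concrete, here is a minimal sketch of what a probabilistic program can look like. It is not taken from the lecture and uses no dedicated probabilistic programming language, only plain Python: the model is an ordinary program that makes random choices (a coin with an unknown bias), and inference is done by naive rejection sampling, that is, by keeping only the runs that reproduce the observed data. The coin example, the function names and the prior parameters `prior_a` and `prior_b` are assumptions made for illustration.

```python
import random


def model(rng, prior_a, prior_b, n_flips):
    """One run of the generative program: draw a bias, then flip the coin."""
    bias = rng.betavariate(prior_a, prior_b)  # human-supplied prior
    heads = sum(rng.random() < bias for _ in range(n_flips))
    return bias, heads


def infer_bias(observed_heads, n_flips, prior_a=1.0, prior_b=1.0,
               n_runs=20_000, seed=0):
    """Posterior mean of the bias, estimated by rejection sampling."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_runs):
        bias, heads = model(rng, prior_a, prior_b, n_flips)
        if heads == observed_heads:  # keep runs consistent with the data
            accepted.append(bias)
    return sum(accepted) / len(accepted)


# Same data (7 heads in 10 flips), two different human-chosen priors.
flat = infer_bias(7, 10, prior_a=1.0, prior_b=1.0)
sceptical = infer_bias(7, 10, prior_a=20.0, prior_b=20.0)
print(f"posterior mean with a flat prior: {flat:.3f}")
print(f"posterior mean with a prior centred on a fair coin: {sceptical:.3f}")
```

The two calls see identical data but return different estimates, which illustrates the point about parameterisation: the inference is mechanical, while the prior is a modelling decision made by a person.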

The c:o/re team would like to thank Professor Frederik Stjernfelt and Dr. Markus Pantsar for organizing the lecture series Philosophy of AI: Optimistic and Pessimistic Views, which ran throughout the summer semester of 2022.

References

Ghahramani, Zoubin. 2015. Probabilistic machine learning and artificial intelligence. Nature 521: 452–459.

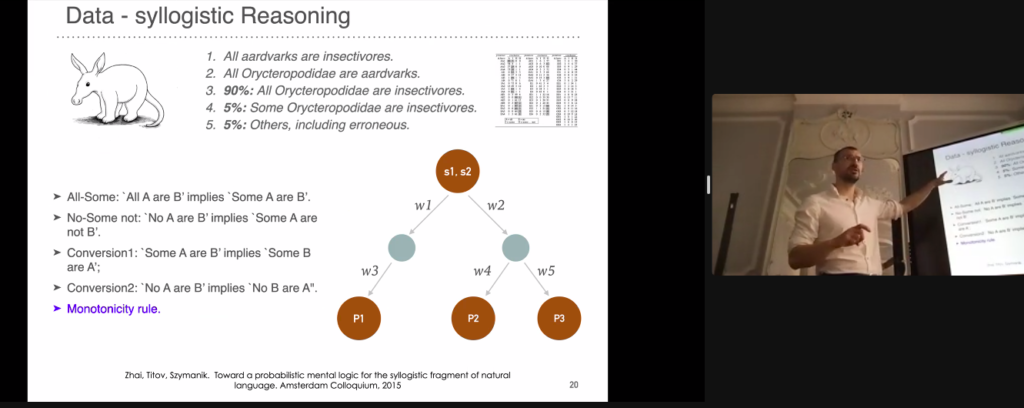

The language of thought: still a salient issue

As part of the Philosophy of AI: Optimistic and Pessimistic Views lecture series, on 25.05.2022 Jakub Szymanik gave a lecture at c:o/re on Reverse-engineering the language of thought, problematising thought and computation by exploring the cognitive scientific notion of language of thought (“mentalese”). This concept, positing that humans think through logical predicates combined through logical operators, originates in Jerry A. Fodor’s celebrated book (1975), explicitly titled Language of thought. In an effort to reverse-engineer the language of thought, Jakub Szymanik considered, in a fresh manner, some recent theories of computation, such as those inspired by Jerome Feldman and by neural networks.

Jakub Szymanik explained that the notion of language of thought is not easy to avoid: it is often the engine underpinning theories of cognitive models, via notions of complexity and simplicity. The investigation leads to a broad variety of theoretical implications and empirical insights, among which there can be many contradictions. However, apparently divergent approaches at play here, such as symbolism and enactivism, are not necessarily and entirely irreconcilable. The way forward is through pragmatically pursuing the epistemological unification of such theories, as guided by empirical insight.
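
The link between a language of logical predicates and notions of simplicity can be illustrated with a small computation. The sketch below is an illustration only, not a model presented in the lecture. It assumes a toy "language" with two Boolean features, their negations, and the operators AND and OR, and it measures the complexity of a concept as the number of literals in the shortest formula that expresses it. The function names are hypothetical.

```python
from itertools import product


def truth_table(concept, n_features):
    """Encode a Boolean concept as a bitmask over all feature assignments."""
    rows = product([False, True], repeat=n_features)
    return sum(1 << i for i, row in enumerate(rows) if concept(*row))


def minimal_formula_sizes(n_features=2, max_size=6):
    """Shortest formula size (in literals) for every expressible concept."""
    everything = (1 << 2 ** n_features) - 1
    best = {}            # truth table -> minimal number of literals
    by_size = {1: set()}  # size -> tables whose minimal size is that size
    for f in range(n_features):
        positive = truth_table(lambda *row, f=f: row[f], n_features)
        for table in (positive, everything & ~positive):  # x and not-x
            best[table] = 1
            by_size[1].add(table)
    for size in range(2, max_size + 1):
        by_size[size] = set()
        for left in range(1, size // 2 + 1):
            for a in by_size[left]:
                for b in by_size[size - left]:
                    for table in (a & b, a | b):  # AND, OR
                        if table not in best:
                            best[table] = size
                            by_size[size].add(table)
    return best


sizes = minimal_formula_sizes()
examples = [
    ("a", lambda a, b: a),
    ("a and b", lambda a, b: a and b),
    ("a xor b", lambda a, b: a != b),
]
for name, concept in examples:
    print(f"{name}: {sizes[truth_table(concept, 2)]} literal(s)")
```

In this toy language a single feature is the simplest concept, a conjunction needs two literals, and exclusive-or needs four, so the choice of primitives determines which thoughts count as simple.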

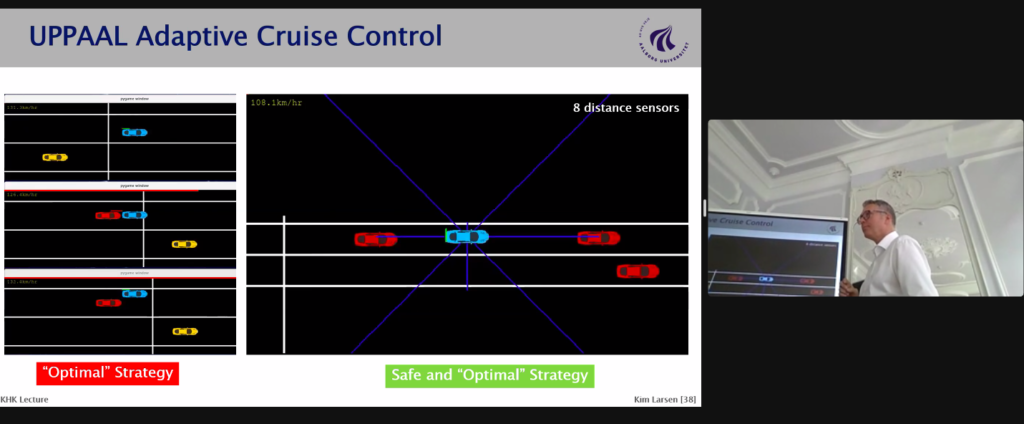

Are we there yet, are we there yet?

In his talk, part of the Philosophy of AI: Optimistic and Pessimistic Views lecture series, Professor Kim Guldstrand Larsen reflected on how far (or near) we are from developing fully autonomous cars. This is a priority challenge for explainable and verifiable machine learning. The question is not easy to answer directly. One certainty, though, is that the answer lies in the cooperation, or lack thereof, between academia and political agents (municipalities). The mediating agent, which neither of the two seems to favour, stems from industry: what can commercial companies deliver to improve traffic? Companies seem to speak both the language of research and that of politics. How much will we smartify traffic in the next, say, 10 years? The question translates, as Professor Ana Bazzan asked simply, into “What will companies do?”

What companies do, in this regard, will impact not only policy but also academia. Success in delivering smart solutions for traffic is expected to guide curriculum development in computer science programs. For example, the commercial solutions will focus teaching on either neural networks, Bayesian networks or automata-based models.

Digital justice for all… and letters: Jean Lassègue on Space, Literacy and Citizenship

Part of the c:o/re Philosophy of AI: Optimistic and Pessimistic Views lecture series, Jean Lassègue’s talk showed that (digital) literacy is intrinsic to digital justice. His minute comparison of the modern notion of justice and what digital justice may be suggests that, aside from many compatibilities and ways in which digital technology can help juridic processes, there is one point of divergence. Namely, this is the despatialization implied by digitalization. In appearance, digital media takes human societies onto despatialized virtual media. However, through an encompassing and thoughtful historical investigation, Jean Lassègue traces the long (cultural) process of despatialization all the way to the emergence of the alphabet as a dominating means of social representation in the West. The alphabet is the beginning of the social practice of “scanning”, eventually fostering computation. In light of this long historical process, questions on digital justice invite the problematization of digital literacy, spatialization and, we would add, embodiment.

“… should be about the people” (Ana Bazzan)

As part of the Philosophy of AI: Optimistic and Pessimistic Views c:o/re lecture series, Ana Bazzan delivered a very rich talk on “Traffic as a Socio-Technical System: Opportunities for AI”. It is beyond the scope of this entry to cover all arguments advanced in this talk. Here, we reflect on one matter that we find particularly interesting and inspiring. Namely, two interrelated central ideas in this talk are human-centredness in engineering and the network structuring of human societies. Ana Bazzan’s work contributes to understanding newly emerging social networks, in their many dimensions, and to ushering in the smart city by engineering and designing traffic as a socio-technical system.

Ana Bazzan highlighted the importance of mobility for equality, in many senses of these two words. Simplifying, a transportation system that facilitates mobility is congruent with democratic and transparent institutions. This realization comes from a user experience manner of thinking or, more broadly, placing the human at the centre of engineering. Stating that traffic and, in general, engineering “should be about the people”, Ana Bazzan further asked: “How to mitigate traffic problems by means of human-centered modeling, simulation, and control?” This question echoes the rationale of the environmental humanities, as posited by Sverker Sörlin (2012: 788), that “We cannot dream of sustainability unless we start to pay more attention to the human agents of the planetary pressure that environmental experts are masters at measuring but that they seem unable to prevent.” It is highly insightful that many sciences find ways for progress by reflecting on human matters. It may pass unnoticed, as a detail, but very different problematizations stem from thinking of either technical or socio-technical solutions for, say, traffic. The endeavour of engineering solutions in awareness of social and cultural context is typical of “the creative economy”, being ripe with a creative conflict, as emerging from “where culture clashes most noisily with economics.” (Hartley 2015, p. 80)

In this interrogation, Ana Bazzan referred to Wellington’s argument that we are now in a century of cities, as opposed to the last century, which was a century of nation states. Indeed, research from various angles shows that digitalization transcends the borders, as imagined through print (Anderson 2006 [1983]), of nation-states. In a similar vein, defining industrial revolutions as the merging of energy resources with communication systems, Jeremy Rifkin (2011) argues that to achieve sustainability, it is necessary to merge renewable energy grids with digital communication networks. This would result in overcoming the dependence on the merger of motorways, powered by fossil fuels, and broadcasting.

Particularly through reinforcement learning, digital technology and AI are the instruments of the transition towards smart cities. Smart cities do not merely span a geographical territory but are better identified as (social) networks. They are incarnate in traffic. Ana Bazzan also insists that broadcasting the same information about traffic to all participants in traffic is not useful. Drivers exhibit rational behavior, according to pragmatic purposes. More than simply targeting pragmatically useful information to specific drivers, a smart traffic system or, better, a smart city is constituted by multiagent systems, not by a centralized and unidirectional top-down transmission of information. As such, while not broadcasting uniformly, this approach is, actually, anti-individualistic. It makes evident the benefits, particularly the cumulative rewards, of seeking solutions in light of people’s shared and concrete necessities. To apprehend these networks and serve the needs of their actants, Ana Bazzan advocates a decentralized, bottom-up approach. Indeed, this is a characteristic of ‘network thinking’ (Hartley 2015). The resulting networks render obsolete previously imagined community boundaries, revealing, instead, the real problems of people as they find themselves in socioeconomic contexts. The city is these networks and it becomes according to how they are engineered.
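
A decentralized, multiagent setup of this kind can be sketched in a few lines. The example below is a toy model and not Ana Bazzan's system: it assumes two identical routes, a travel cost equal to the number of drivers on the chosen route, and drivers that each run an independent, stateless Q-learning update on their own experience. The names and the values of `alpha` (learning rate) and `epsilon` (exploration rate) are illustrative assumptions, and the sketch makes no claim about convergence.

```python
import random
from statistics import fmean


def simulate_route_choice(n_agents=100, n_rounds=500, alpha=0.1,
                          epsilon=0.1, seed=0):
    """Independent learners repeatedly choose between two congested routes."""
    rng = random.Random(seed)
    # Each driver keeps a private value estimate per route; nothing is shared.
    q = [[0.0, 0.0] for _ in range(n_agents)]
    shares = []
    for _ in range(n_rounds):
        choices = []
        for values in q:
            if rng.random() < epsilon or values[0] == values[1]:
                choices.append(rng.randrange(2))  # explore or break a tie
            else:
                choices.append(0 if values[0] > values[1] else 1)
        load = [choices.count(0), choices.count(1)]
        for agent, route in enumerate(choices):
            reward = -load[route]  # more cars on the route, longer trip
            q[agent][route] += alpha * (reward - q[agent][route])
        shares.append(load[0] / n_agents)  # share of drivers on route 0
    return shares


shares = simulate_route_choice()
print(f"average share on route 0, last 200 rounds: {fmean(shares[-200:]):.2f}")
```

No central controller tells any driver where to go, and no driver receives the same broadcast as the others: each one adapts to the load produced by everyone else's choices.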

References

Anderson, Benedict. 2006 [1983]. Imagined communities: Reflections on the origin and spread of nationalism. London: Verso.

Hartley, John. 2015. Urban semiosis: Creative industries and the clash of systems.

Rifkin, Jeremy. 2011. The Third Industrial Revolution: How lateral power is transforming energy, the economy, and the world. New York: Palgrave Macmillan.

Sörlin, Sverker. 2012. Environmental humanities: why should biologists interested in the environment take the humanities seriously? BioScience 62(9): 788-789.

Technologically enlightened: Interdisciplinary research in robotics or the privilege of being messy

Today’s c:o/re workshop on Interdisciplinary Research in Robotics and AI, organized by Joffrey Becker, insightfully showcased the mutual relevance of scientific and market research, particularly with regard to design. It convincingly posited that materiality and physical properties are intrinsic to learning. The talks by Samuel Bianchini, Hugo Scurto and Elena Tosi Brandi showed that learning is a matter of designing. We do not make stuff out of nothing. Elena Tosi Brandi explained that “when you design behaviors, you have to put an object in an environment, a context.” Humans appear to notice this by interacting with robots. For example, machine learning processes offer opportunities for humans to reflect on their own learning. Animated (digital) objects acting independently from humans and thus, arguably, having agency, in so many words, make us wonder. Observing relations between software, bodies and non-organic matter places humans in a new position to understand how materiality is intrinsic to knowledge. Director of c:o/re, Stefan Böschen, noted that experimental research on robotics is often open-ended, aiming to stimulate innovation in a “what may be” interrogation. To this, Hugo Scurto added that for him “it is a privilege to be able to do research in a messy way”.

The consideration of the epistemic qualities of material properties urges the reconsideration of Western modern philosophy, which we shall dive into in tomorrow’s workshop, Enlightenment Now, hosted by Steve Fuller and Frederik Stjernfelt. As Steve Fuller already remarked, listening to Elena Tosi Brandi’s work on design, it is possible “that machines and humans might both improve their autonomy through increased interaction. But this will depend on both machines and humans being able to learn in a sufficiently ‘free’ way, regardless of what that means.” If Hume woke up Kant from the dogmatic slumber, it seems that robots can wake us up some more.