Invitation to talk: From print capitalism to surveillance capitalism: Mapping the Sociotechnical Imaginaries of Platform Surveillance in Japan

As part of the collaboration of c:o/re with Ritsumeikan Asia Pacific University, Peter Mantello and Alin Olteanu will give a talk entitled “From print capitalism to surveillance capitalism: Mapping the Sociotechnical Imaginaries of Platform Surveillance in Japan” on 6 June 2024 from 1 to 3pm at the National University of Science and Technology in Bucharest, which will shed light on the interaction of technology, AI, philosophy and ethics.

The talk will be broadcast live on Microsoft Teams. Click here to attend online.

Abstract:

We argue that AI surveillance effects the transition from print capitalism to surveillance capitalism. We study this process by looking at konbini surveillance, the role of convenience stores in Japan as ‘middlemen’ for AI platforms, collecting clients’ biometric data. Through this study, we also ponder the general concept of technology, and argue that situated cognition theories should construe technology as the mind’s outworking itself into its next state. As such, we contribute to uprooting (post-)Cartesian Reason from philosophy of technology (Clowes 2019). The latter led to conceptualizing technology as adjacent (see Walter, Stephan 2022), instead of intrinsic to mind, despite historically confusing the print medium with reasoning (Hartley 2012).

Print capitalism (Anderson, 1983 [2006]) refers to the literary marketplace that emerged because of the effects of printing technology to enable mass literacy through public education. In the specific circumstances of modernity, literate publics began to perceive themselves as nations: readers of printed press imagined themselves as monolingual communities that require self-governance.

Surveillance capitalism (Zuboff 2019) refers to the data collecting intrinsic to digital tech corporations, based on claiming human experience as material for translation into machine computable data. It contradicts aspirations of digital democracy. Predicting and shaping behavior, surveillance capitalism leads to behavioral futures marketplaces, exploiting digital connection as a means towards commercial ends.

We see surveillance capitalism as the fulfilment of the requirement of print capitalism to imagine closed communities, obstructing the emergence of a sense of kinship on a global level, or “biosphere consciousness” (Rifkin 2011). As digital mediascapes do not afford imagining nations, surveillance capitalism is an ideological attempt to maintain nation-states as concretized into digital datasets. A community as a dataset is something that (post)digital citizens can imagine.

We illustrate our theory by considering current manifestations of Japanese techno-nationalism as an imagined space that transcends normative understandings of ‘nation’. With Robertson (2022), we consider that Japanese techno-nationalism serves as a model for ushering digital nations, reinforcing imaginaries of nationhood through “kinship technologies” that obstruct the expansion of human empathy beyond previously imagined boundaries. We conceive Japanese techno-nationalism as a computational and algorithmic space tethered to larger digital infrastructures, i.e. platform capitalism (Murakami Wood, Monahan 2019).

If print media (newspapers) are historically responsible for modern understandings of nation, then AI surveillance plays a critical role in writing the socio-technical imaginary of Japanese techno-nationalism. To reflect on this, with a focus on the convenience store (konbini), we employ Lefebvre’s (1991 [1974]) concept of space. Like an increasing number of digitalized social spaces (workplace, transport, entertainment, hospitals, prisons), the konbini as a surveillant exchange disciplines and monetizes (mal)practices of consumption.

References

Anderson, B. 2006/1983. Imagined communities: Reflections on the origin and spread of nationalism. London: Verso.

Clowes, R. 2019. Immaterial engagement: human agency and the affective ecology of the internet. Phenomenology and the Cognitive Sciences 18, 259-279.

Hartley, J. 2012. Digital futures for cultural and media studies. Chichester: Wiley-Blackwell.

Murakami Wood, D., Monahan, T. 2019. Editorial: Platform Surveillance. Surveillance & Society 17(1/2): 1-6.

Lefebvre, H. 1991 [1974]. The production of space. Trans. Nicholson-Smith, D. Oxford: Blackwell.

Rifkin, J. 2011. The third industrial revolution: How lateral power is transforming energy, the economy, and the world. New York: Palgrave Macmillan.

Robertson, J. 2022. Imagineerism: Technology, Robots, Kinship. Perspectives from Japan. In: Bruun, M. H., Wahlberg, A., Douglas-Jones, R., Hasse, C., Hoeyer, K., Kristensen, D. B., Winthereik, B. R. Eds. The Palgrave Handbook of Anthropology of Technology. Singapore: Palgrave Macmillan.

Walter, S., Stephan, A. 2022. Situated affectivity and mind shaping: Lessons from social psychology. Emotion Review 15(1): 3-16.

Zuboff, S. 2019. The age of surveillance capitalism: The fight for a human future at the new frontier of power. New York: Public Affairs Books.

Get to know our Fellows: Sarah R. Davies

Get to know our current fellows and gain an impression of their research.

In a new series of short videos, we asked them to introduce themselves, talk about their work at c:o/re, the impact of their research on society and give book recommendations.

You can now watch the latest video on our YouTube channel, in which Sarah R. Davies speaks about her research on data practices in the biosciences and explains her interest in working conditions in academia:

Check out our media section or our YouTube channel to have a look at the other videos.

c:o/re Movie Nights

We are looking forward to the collaboration with the film studio of RWTH Aachen University! As part of the lecture series of the Käte Hamburger Kolleg: Cultures of Research (c:o/re) in the summer semester “Lifelikeness” we will show two movies:

May 29: Her by Spike Jonze (2013), at 8:15 pm. As an introduction before the film, philosopher Ben Woodard (ICI Berlin) will give a lecture entitled “Ideal Locale – her and the envelope function of idealist predication”. The lecture will take place on May 29 at 3 pm at Theaterstraße 75. Please send us an email if you would like to come to the lecture: events@khk.rwth-aachen.de

June 11: I’m a Cyborg, But That’s OK by Park Chan-wook. The film will be preceded by a short introduction and followed by a discussion moderated by the Käte Hamburger Kolleg: Cultures of Research.

By “Lifelikeness” we mean the representation and/or imitation of living beings in science and technology in fields such as robotics, synthetic biology or AI and neuromorphic computing. We ask how their increasing complexity mimics not only a fixed notion of life, but also the understanding of “life” as such.

Further information on the lecture series can be found on this page.

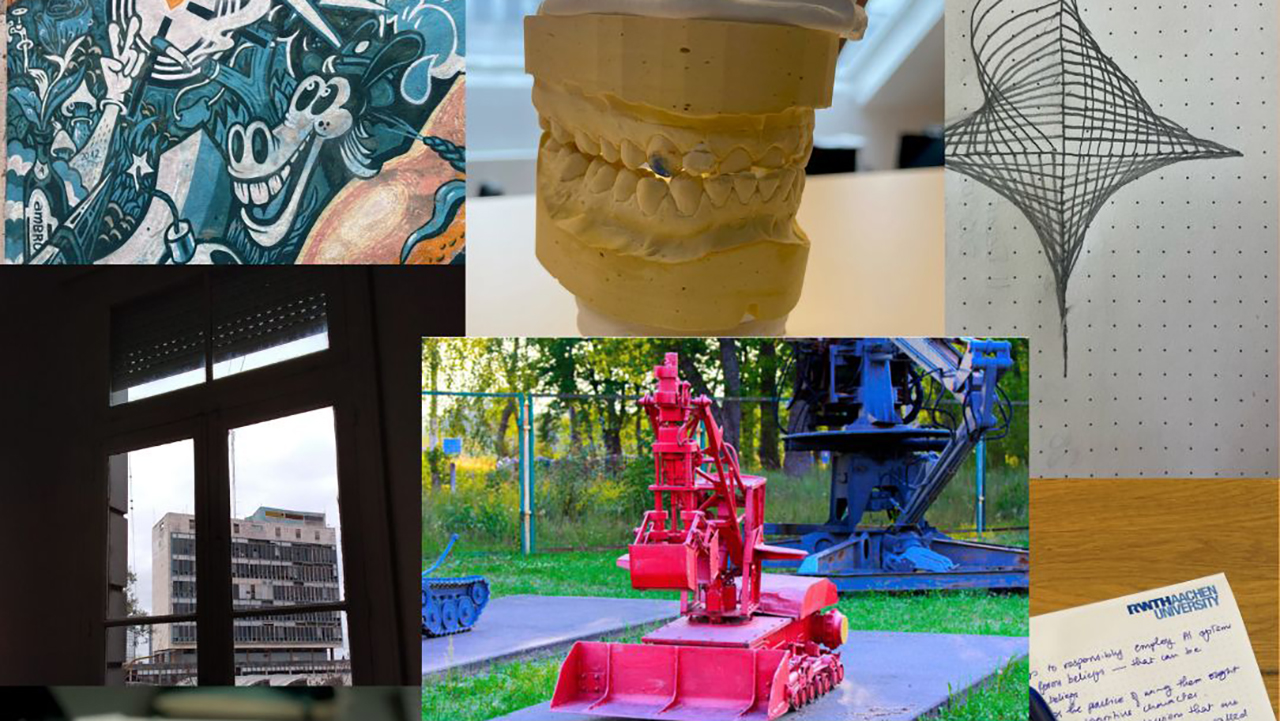

Discover “Objects of Research”

Being in the third year of our Fellowship Program, c:o/re is accumulating a remarkable variety of perspectives revolving around its main focus, research on research.

Questions tackled in this lively research environment are highly interesting and exciting and, as such, complex. The meeting of distinct research cultures may stir curiosity but may also leave one wondering what is the other even talking about… What are they studying?

To offer an insightful glimpse into the lively dialogues here, bridging and reflecting on diverse academic cultures, we have started the blog series “Objects of Research”.

We asked current and former c:o/re fellows and academic staff to show us an object that is most relevant to their research in order to understand how they think about their work.

In 12 contributions, we were able to witness the personal connections researchers have to objects that shape their work. We now invite you to visit the individual contributions and explore the world of research once again.

“For the past two decades, I have had a leading role in developing the neuronal network simulator NEST. This high-quality research software can improve research culture by providing a foundation for reliable, reproducible and FAIRly sharable research in computational neuroscience. Together with colleagues, I work hard to establish “nest::simulated()” as a mark of quality for research results in the field. Collaboration in the NEST community is essential to this effort, and many great ideas have come up while sharing a cup of coffee.”

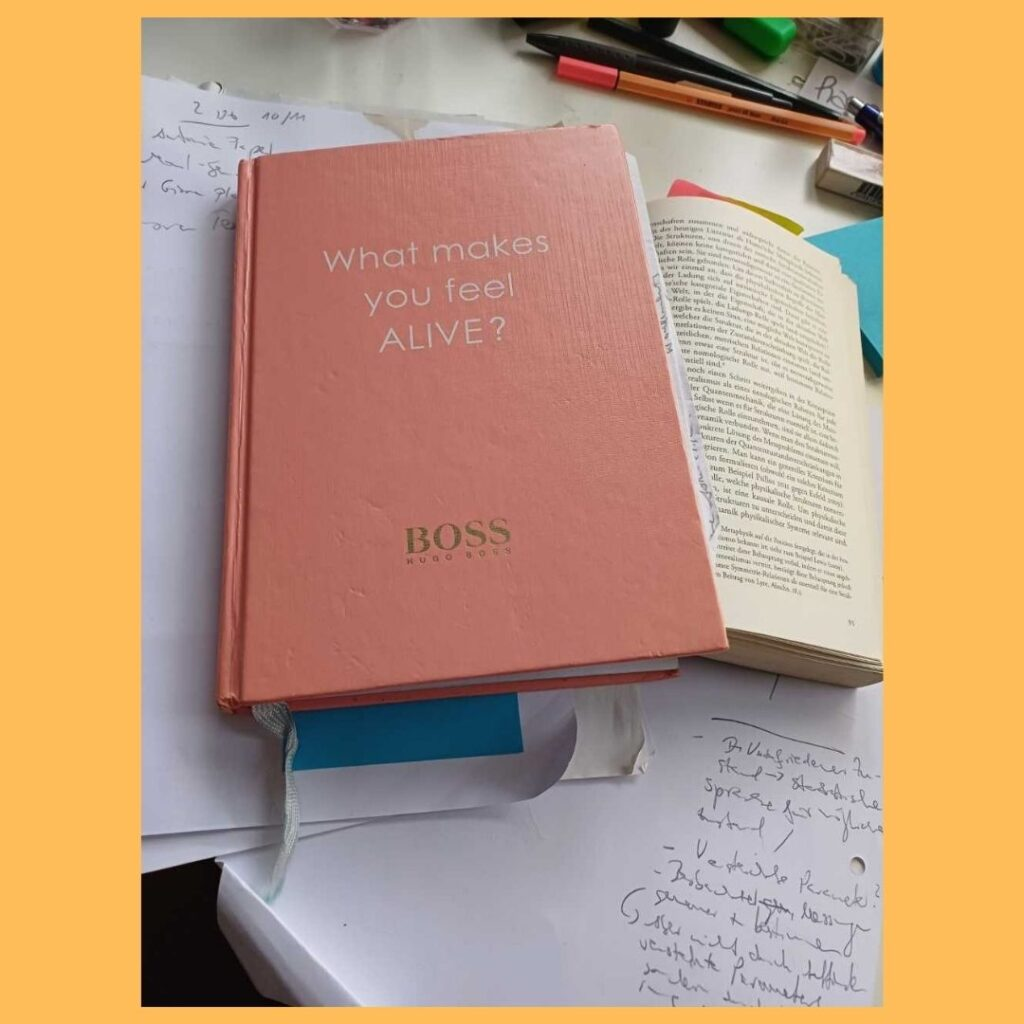

“This is a notebook my Mom gave me. She had it as a kind of leftover from a shopping tour and she thought that it might be of use for my work. And of course, she was right. And as you know, research always starts with a good question that attracts attention.”

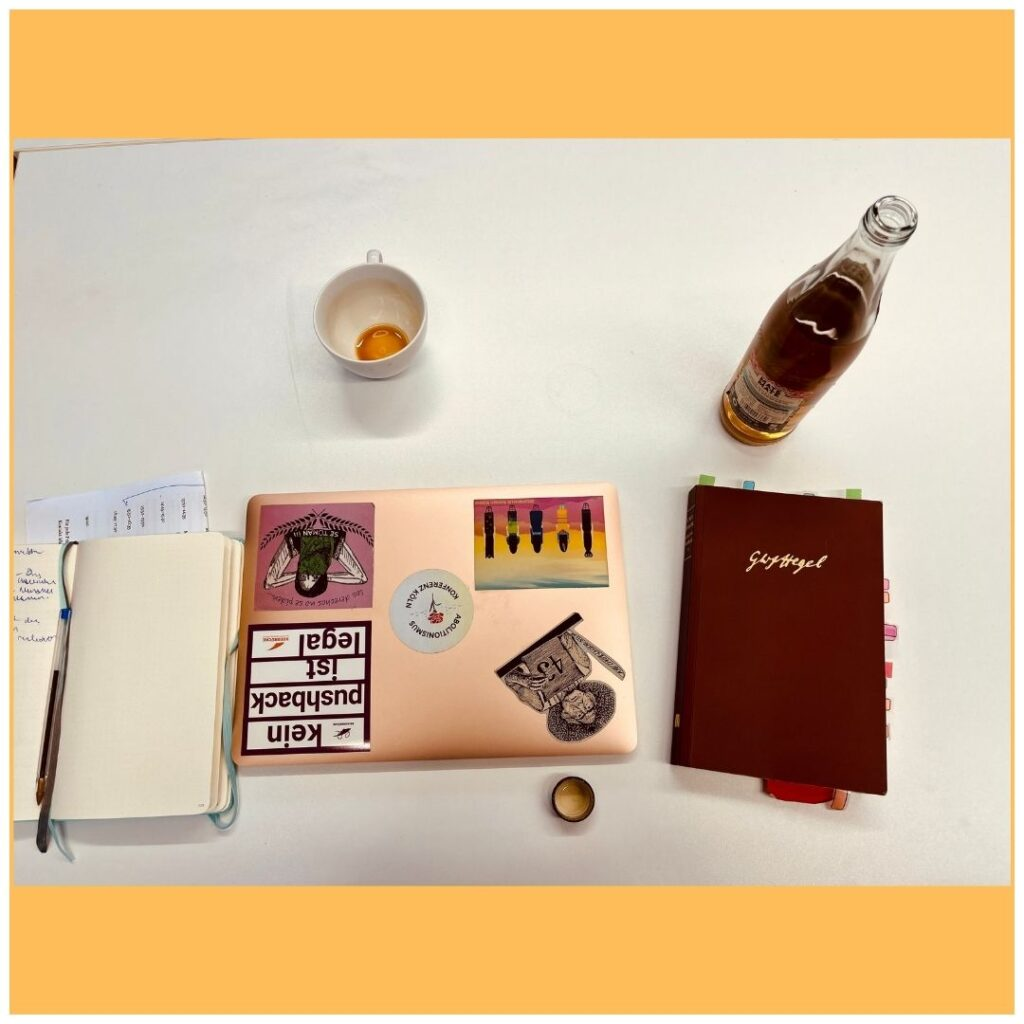

“I guess many academics would share some variant of this image: a careful arrangement of computer equipment, coffee, notepads, pens, and the other detritus that lives on (my) desk.

For me it’s important that the technical equipment is shown in conjunction with the paper notebook and pens. I’m fussy about all of these things – it’s distracting when my computer set-up isn’t what I’m used to, and I need to use very specific pens from a particular store – but ultimately my thinking lives in the interactions between them.

My colleagues and I are working on an autoethnographic study of knowledge production, and notice that (our) creative research work often emerges as we move notes and ideas from paper to computer (and back again).”

“I use mechanical pencils (like the one in the photo) to highlight, annotate, question, clarify, or reference things I read in books. This helps me digest the arguments, ideas, and discourses I deal with in my historical and sociological research. I also have software for annotating and organizing PDFs on my iPad as well as a proper notebook for excerpting and writing down ideas. However, I’ve found that the best way for me to connect my reading practices with my thoughts is through the corporeal employment of a pencil on the physical pages of a book.”

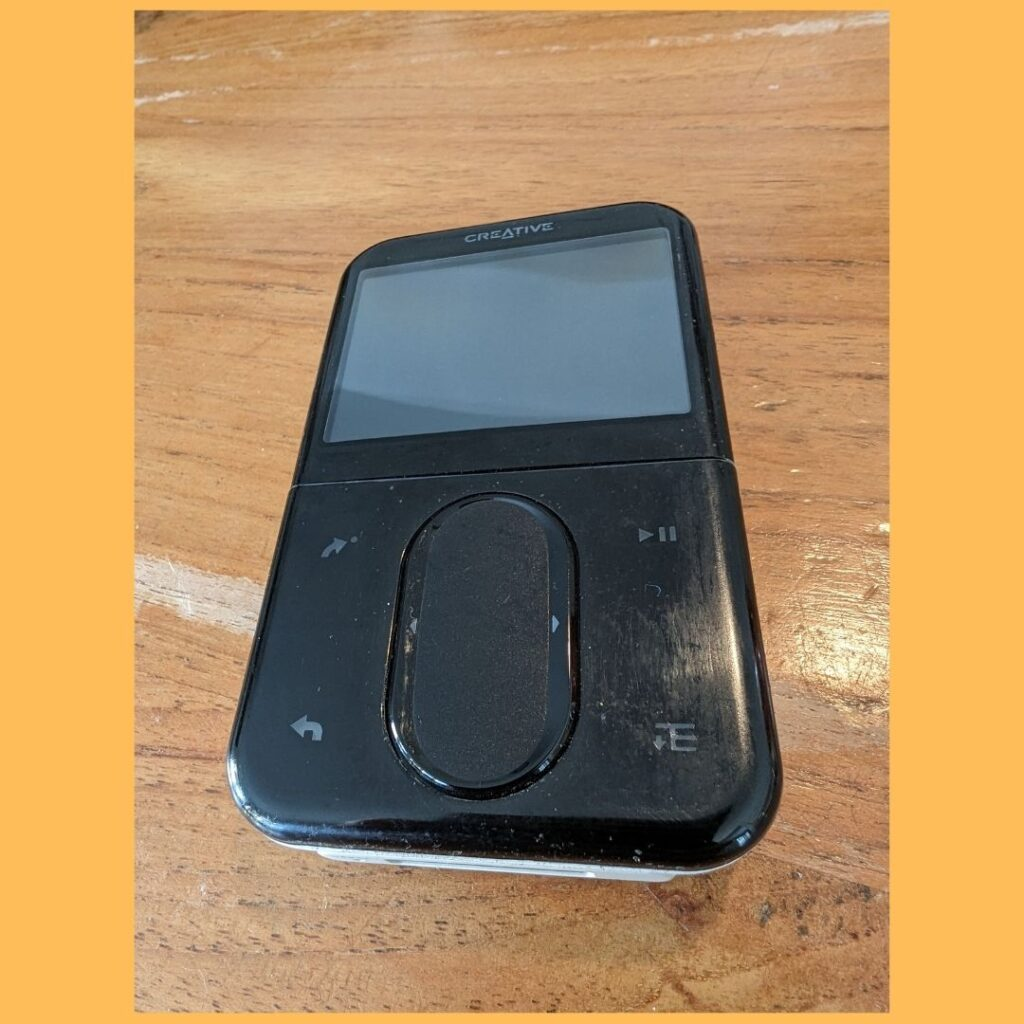

“As part of the work I do at KHK c:o/re, as well as extending beyond that, I collect empirical data. In my case, that data consists of records of interviews with scientists and others. Those records can be notes, but they can also be integral recordings of the conversations.

Relying on technology for the production of data is what scientists do on a daily basis. With that comes a healthy level of paranoia around that technology. Calibrating measurement instruments, measurement triangulation, and comparisons to earlier and future records all help us to alleviate that paranoia. I am not immune and my coping mechanism has been, for many years, to take a spare recording device with me.

This is that spare, my backup, and thereby the materialisation of how to deal with moderate levels of technological paranoia. It is not actually a formal voice recorder, but an old digital music player I have had for 15 years, the Creative Zen Vision M. It has an excellent microphone, abundant storage capacity (30 gigabytes) and, quite importantly, no remote access options. That last part is quite important to me, because it ensures that the recording cannot enter the ‘cloud’ and be accessed by anyone but me. Technologically, it is outdated. It no longer serves its original purpose: I never listen to music on it. Instead, it has donned a new mantle as a research tool.”

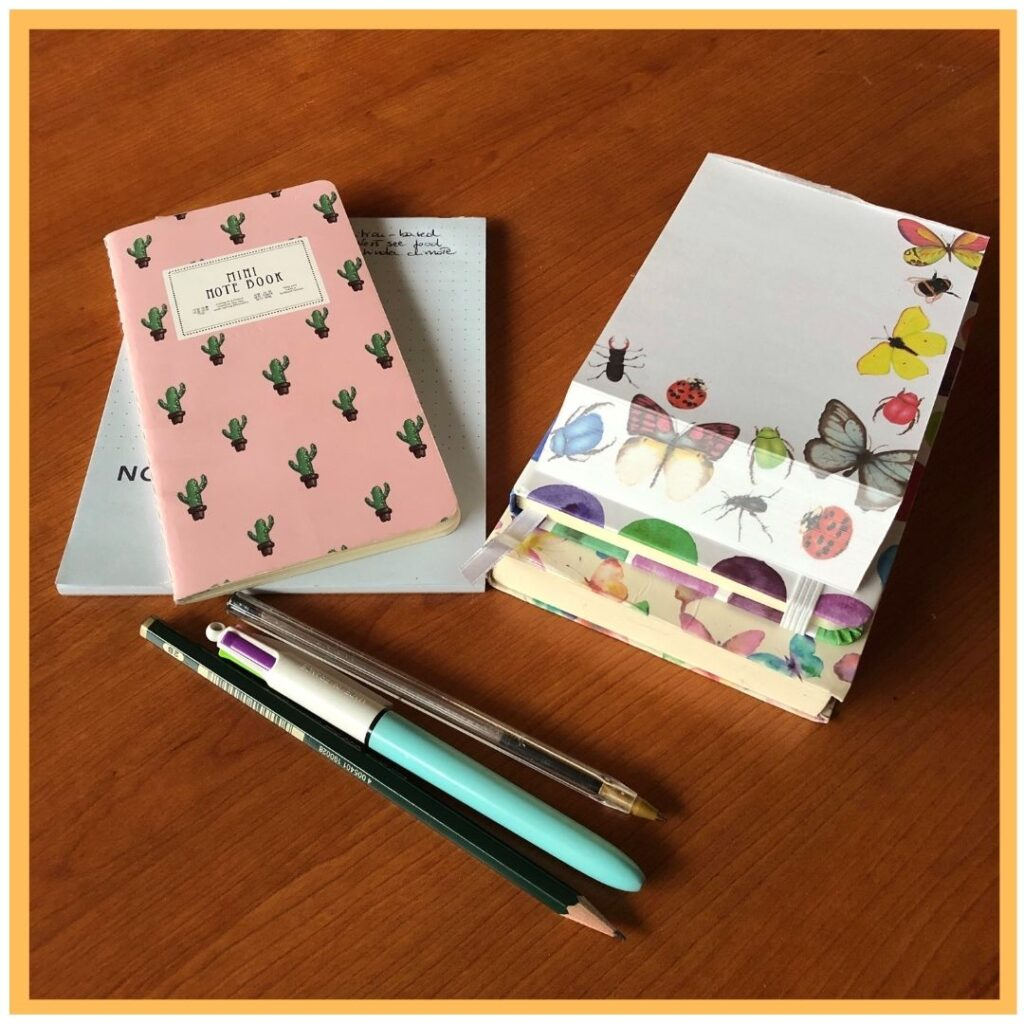

“When asked about the fundamental object for my research practice, I immediately thought of my computer, which seemed the obvious answer given that I read, study, and write on it most of the time.

Upon further reflection, however, I realized that on my computer, I just manage the initial and final phases of my research, namely gathering information and studying on the one hand, and writing papers on the other.

Yet, between these two phases, there is a crucial intermediate step that truly embodies the essence of research for me: the reworking, systematization, organization, and re-elaboration of what I have read and studied, as well as the formulation of new ideas and hypotheses. These processes never occur on the computer but always on paper.

Therefore, the essential objects for my research are notebooks, sticky notes, notepads, pens, and pencils.”

“As I research Hegel’s logic and how he understands life as a logical category necessary to make nature intelligible, I work closely with his texts. On the other hand, the stickers on my laptop remind me of the need to look at reality and regularly question the relevance of my research for understanding current social phenomena. In this sense, I think I remain a Hegelian, because for Hegel one can only fully understand an object of research by looking at both its logical concept and how it appears in reality. However, I think that in order to look at current political and social phenomena, we need to go beyond Hegel’s racist and sexist ideas, which are all around his ideas on social organization. And none of this would be possible without a good cup of coffee and/or a club mate!”

“The 3D replica of my teeth that stands on my desk reminds me of two important things. First, a model is what we make of it. The epistemic value of modelling lies in interpretation, which depends on but is not defined by representation. I make something very different of (a replica of) teeth than a dentist and an archaeologist do.

Secondly, and not any less important, this replica reminds me to smile, and I hope that it might inspire colleagues to smile, too, when they see it on my desk.

To tell a smile from a veil, as Pink Floyd ask us to, we need to know that a smile is infinitely more important than scientific modelling. If scientific modelling does not lead to smiling, it is of no value. A smile is a good metonymy to be reminded by.”

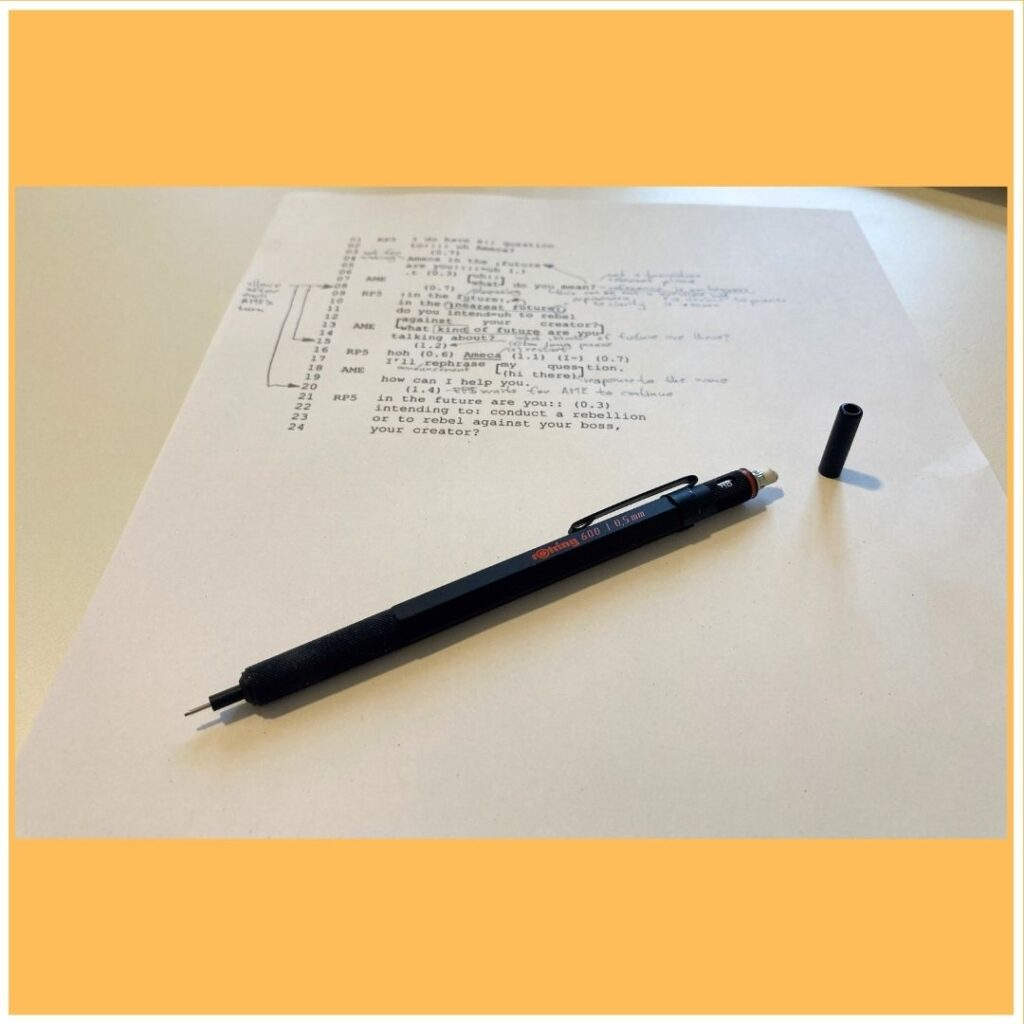

“There is a joke about which faculty is cheaper for the university. Mathematics is very cheap because all they need is just pencils and erasers. But philosophy is even cheaper because they don’t even need erasers.

My favorite and indispensable object is the rOtring 600 mechanical pencil. It shows that social science is closer to mathematics than to philosophy. Of course, social scientists often need more than pencil and eraser: they have to collect and process data from the real world. But this processing is greatly facilitated by the ability to write and erase your observations.

In my work, I deal with the transcripts of human-machine communication, and I use the rOtring 600, which has a built-in eraser, a lot. It’s useful not only because of the eraser, but also because it’s designed to stay on the table and not break, even in very demanding circumstances like the train journey. And it gives me the feeling that I am making something tangible with it, because it reminds me of engineers or designers producing blueprints for objects and machines.”

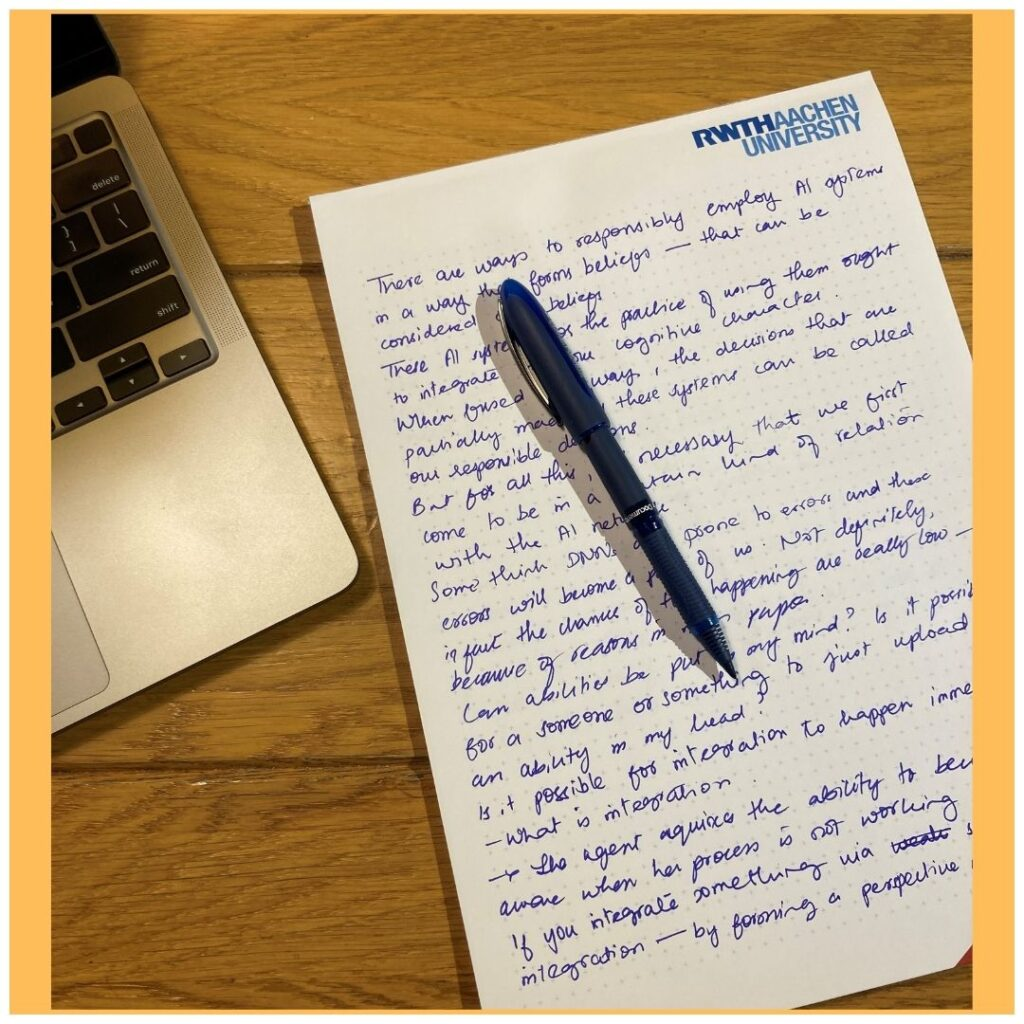

“A pen and a notebook are essential for my research. They help me think. It’s not at all about the words I write. I rarely read them again. Scribbling is just an act that helps me stack ideas on top of each other and do all the complicated thinking and connection-building.

I also turn to scribbling in my notebook when I am stuck in the writing process. There is often a time after the first rough draft of the paper when some ideas stop flowing smoothly or don’t fit very well with the main argument. I turn to the notebook and start writing the main ideas, deliberating how they support each other.

This is all especially interesting since a lot of my research is about extended cognition, which is the idea that we sometimes employ external resources such that part of our thinking happens outside our body (in these resources).”

“Spending a few weeks in Argentina, in front of my desk, a Post Office building. A nice futuristic architectural concept, degraded by its construction materials, support of a communication antenna, appropriated by pigeons as a dovecote: a hybrid object.”

“By saying that I study ‘artful intelligence’, which I mean only as a half joke, I take seriously the propositions to my career as a media scholar that…

1. As the first image suggests, human artfulness can be found all around, such as this snapshot of a wall on a side street not far from the Cultures of Research at the RWTH.

2. Sometimes architectural masterpieces that represent more than the sharp angles of twentieth-century modernism are all about us, such as this bus stop on the way to Cultures of Research in Aachen. Any study of science and technology has to ask, what does it mean? Sources do not speak for themselves.

3. Sometimes artificial intelligence is best found in letting people be people, such as a doodle here in a sketchbook. Straight lines do not always precipitate straightness.

4. I study how science, technology, and artificial intelligence have been understood in different times and places, such as this remote-controlled robot that failed in the immediate aftermath of the Chernobyl explosion in 1986 in Soviet Ukraine, which helps unstiffen, enliven, and sober our imagination of what may already be the case today and could be the case tomorrow.”

Thank you for joining us on this journey. We look forward to sharing more insights and stories with you!

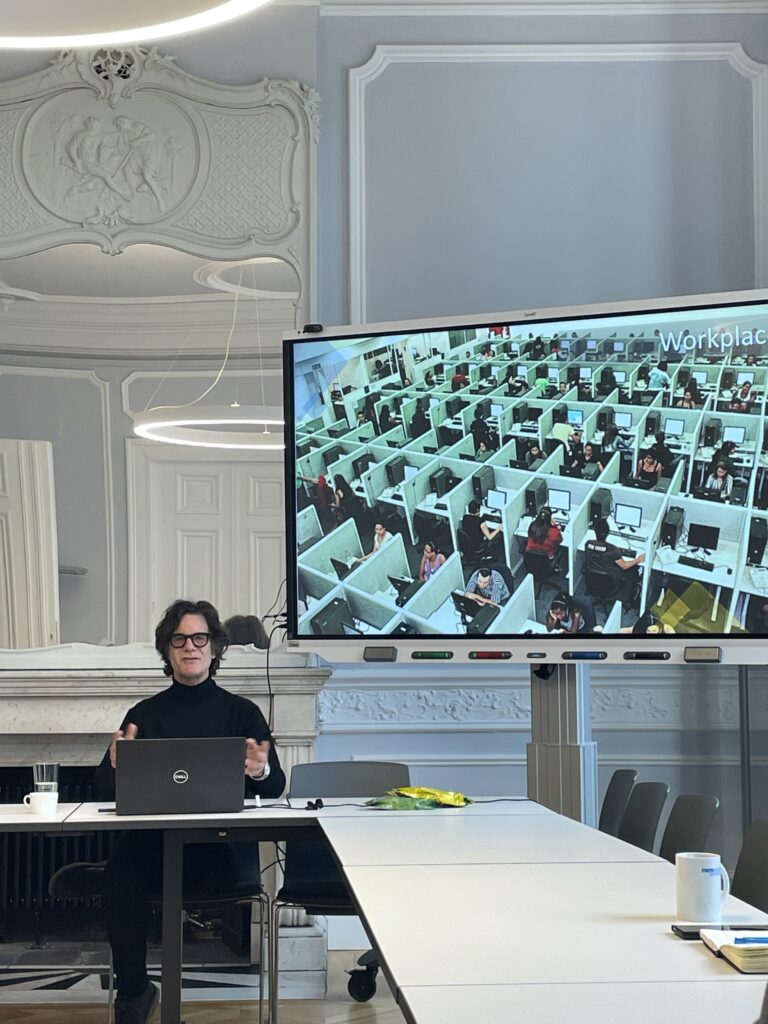

“Humans haven’t necessarily made the best choices for our world.” – Interview with Peter Mantello on Emotionalized Artificial Intelligence

In February 2024, a collaboration with colleagues from Ritsumeikan Asia Pacific University on the topic of Emotionalized Artificial Intelligence (EAI) started. Professor Peter Mantello (Ritsumeikan Asia Pacific University) leads a 3-year project funded by the Japan Society for the Promotion of Science, on which c:o/re is a partner, that will compare attitudes in Japan and in Germany on EAI in the workspace. This explorative pathway contributes to the c:o/re outlook on Varieties of Science. You can find the whole project description on our website here.

In the interview below, Peter Mantello explains what EAI is, how the project will consider AI ethics and why the comparison of German and Japanese workplaces is particularly insightful. We thank him for this interview and look forward to working together.

Peter Mantello

c:o/re short-term Senior Fellow (11-17/2/2024)

Peter Mantello is an artist, filmmaker and Professor of Media Studies at Ritsumeikan Asia Pacific University in Japan. Since 2010, he has been a principal investigator on various research projects examining the intersection between emerging media technologies, social media artifacts, artificially intelligent agents, hyperconsumerism and conflict.

What is Emotionalized Artificial Intelligence (EAI)? What does this formulation entail differently than ‘Emotional’ AI?

Emotional AI is the commercial moniker of a sub-branch in computer science known as affective computing. The technology is designed to read, monitor, and evaluate a person’s subjective state. It does this by measuring heart rate, respiration rate, skin perspiration levels, blood pressure, eye movement, facial micro-expressions, gait, and word choice. It involves a range of hardware and software. This includes cameras, biometric sensors and actuators, big data, large language models, natural language processing, voice tone analytics, machine learning, and neural networks. Emotionalized AI appears in two distinct forms: embodied (care/nursing robots, smart toys) and disembodied (chatbots, smartphone apps, wearables, and algorithmically coded spaces).

I think the term ‘emotionalized’ AI better encompasses the ability of AI not just to read and recognize human emotion but also to simulate and respond in an empathic manner. Examples of this can be found in therapy robots, chatbots, smart toys, and holograms. EAI allows these forms of AI to communicate in a human-like manner.

What is emotionalized AI used for and for what is it further developed?

Currently, emotionalized AI can be found in automobiles, smart toys, healthcare (therapy robots, doctor-patient conversational AI), automated management systems in the workplace, advertising billboards, kiosks and menus, home assistants, social media platforms, security systems, wellness apps and videogames.

What forms of ethical work practices and governance do you have in mind? Are there concrete examples?

There are a range of moral and ethical issues that encompass AI. Many of these are similar to conventional usages of AI, such as concerns about data collection, data management, data ownership, algorithmic bias, privacy, agency, and autonomy. But what is specific about emotionalized AI is that the technology pierces through the corporeal exterior of a person into the private and intimate recesses of their subjective state. Moreover, because the technology targets non-conscious data extracted from a person’s body, they may not be aware of, or consent to, the monitoring.

Where do you see the importance of cultural diversity in AI ethics?

Well, it raises important issues confronting the technology’s legitimacy. First, the emotionalized AI industry is predominantly based in the West, yet the products are exported to many world regions. Not only are the data sets used to train the algorithms limited to primarily Westerners, but they also rely largely on famed American psychologist Paul Ekman’s ‘universality of emotions theory’, which suggests that there are six basic emotions and that they are expressed in the same manner by all cultures. This is untrue. But thanks to a growing number of critics who have challenged the reliability/credibility of face analytics, Ekman’s theory has been discredited. However, this has not stopped many companies from designing their technologies on Ekman’s debunked templates. Second, empathetic surveillance in certain institutional settings (school, office, factory) could lead to emotional policing, where to be ‘normal’ or ‘productive’ will require people to be always ‘authentic’, ‘positive’, and ‘happy’. I’m thinking of possible dystopian Black Mirror scenarios, like in the episode known as “Nosedive”.

Third, exactly what kind of values do we want AI to have – Confucian, Buddhist, Western Liberal?

Do you expect to find significant differences between the Japanese and German workplace?

Well, it’s important to understand the multiple definitions of the workplace. Workplaces include commercial vehicles, ridesharing, remote workspaces, hospitals, restaurants, and public spaces, not just brick-and-mortar white-collar offices.

Japan and Germany share common work culture features, but each society also has historically different attitudes to human resource management relationships, what constitutes a ‘good’ worker, loyalty, corporate responsibility to workers, worker rights and unions, and precarity. The two cultures also differ in how they express their emotions, raising questions about the imposition of US and European emotion analytics in the Japanese context.

How will the research proceed?

The first stage of the research will be to map the ecology of emotion analytics companies in the West and East. This includes visits to trade show exhibits, technology fairs, start-up meetings, etc. The second stage will be interviews. The third stage will include a series of design fiction workshops targeted to key stakeholders. Throughout all of these stages, we will be holding workshops in Germany and Tokyo, inviting an interdisciplinary mix of scholars, practitioners, civil liberties advocates and industry people.

What do you think will be the most important impact of this project?

We are at a critical junction point in defining and deciding how we want to live with artificial intelligence. Certainly, everyone talks about human-centric AI but I don’t know what that means. Or if that’s the best way forward. Humans haven’t necessarily made the best choices for our world. If we try to make AI in our own image, it might not turn out right. What I hope this project brings are philosophical insights that will better inform the values we need to encode into AI, so it serves the best interests of everyone, especially those who will be most vulnerable to its influence.

What inspired you to collaborate with c:o/re?

My inspiration to collaborate with c:o/re stems from my growing interest in phenomenological aspects of human-machine relations. For the past three years, my research has focused primarily on empirical studies of AI. The insights gained from this were very satisfying, albeit they also opened the door to larger, more complex questions that could only be examined from a more theoretical and philosophical perspective. After a chance meeting with Alin Olteanu at a semiotic conference, I was invited to attend a c:o/re workshop on software in 2023. I realized then that KHK’s interdisciplinary and international environment would be a perfect place for an international collaborative research project.

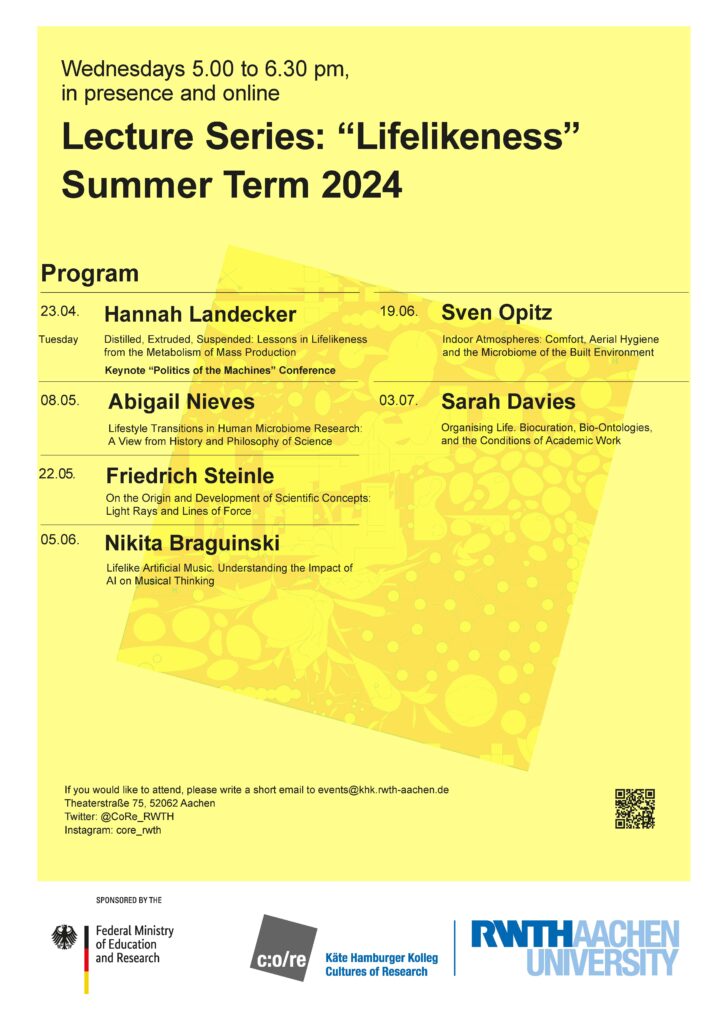

Lecture Series Summer 2024: Lifelikeness

Due to the great interest, the lecture series of the summer semester 2024 will once again be held on the topic of “Lifelikeness”.

Various speakers, including the sociologist Hannah Landecker (University of California, Los Angeles) and the historian of science Friedrich Steinle (TU Berlin), will be guests at the KHK c:o/re and shed light on “Lifelikeness” from different disciplinary perspectives.

Please find an overview of the dates and speakers in the program.

The lectures will take place from May 8 to July 3, 2024, every second Wednesday from 5 to 6.30 pm, in person and online.

An exception is the lecture by Hannah Landecker, which she will give as part of the interdisciplinary conference “Politics of the Machines” on Tuesday, April 23, 2024 from 5:30 to 7 p.m. in the Super C- Generali Saal.

If you would like to attend the lectures, please send a short email to events@khk.rwth-aachen.de.

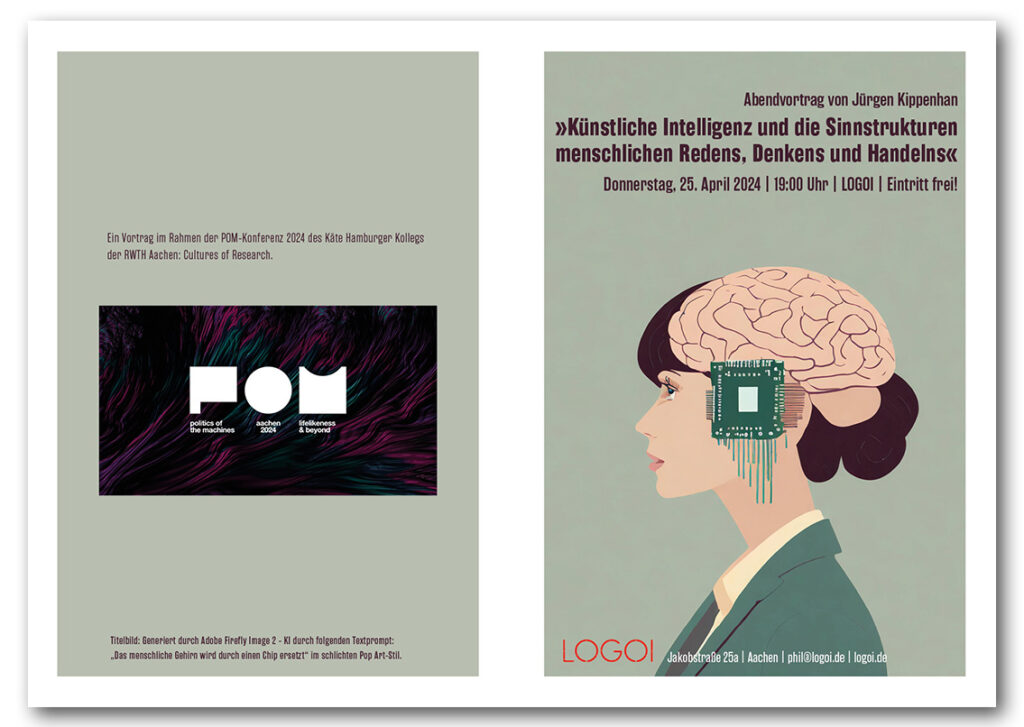

Program: PoM Conference in Aachen

Programmable biosensors, life-like robotics and other artificial models – the present and the future are dominated by new phenomena in the life sciences. How can the challenges, opportunities and uncertainties associated with these advances be addressed?

The transdisciplinary conference series “PoM – Politics of the Machines”, which will take place from April 22 to 25, 2024 at the Super C at RWTH Aachen University (Templergraben 57, 52062 Aachen) under the title “Lifelikeness & beyond”, will explore this question. At the interface of science and art, the conference aims to stimulate reflection on the comprehensive connections that shape our perception of the world.

International researchers and practitioners from various fields of science, technology and art will come together to discuss socio-cultural concepts of the future, the interaction between human and machine and ideas of the living and non-living in different formats.

The main program from 22 to 25 April will take place in Aachen in the Super C of the RWTH Aachen University and in the LOGOI Institute.

Super C: Templergraben 57, 52062 Aachen

LOGOI Institute: Jakobstraße 25a, 52064 Aachen

You can register with this form.

Further information on the schedule can be found in this program.

You can find a longer version with all abstracts in this program.

On Thursday, April 25, Dr. Jürgen Kippenhan will give a talk on “Artificial intelligence and the sensory structures of human speech, thought and action” as part of the “POM Conference” at LOGOI, Jakobstraße 25a, 52064 Aachen.

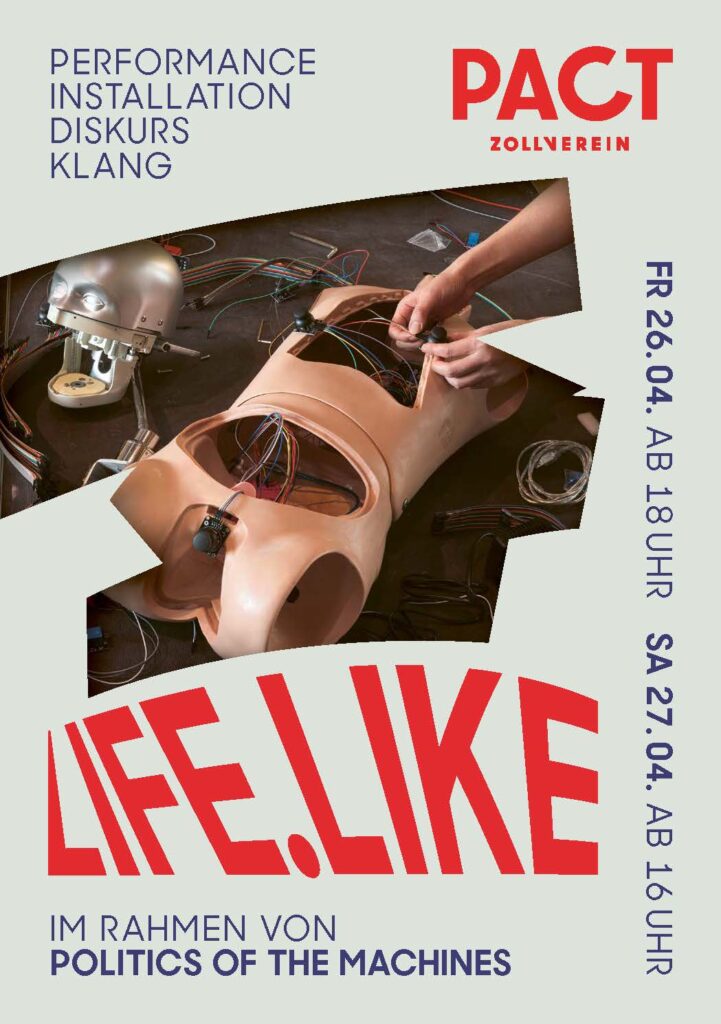

As part of the conference, the choreographic centre PACT Zollverein in Essen will realize the accompanying programme ‘life.like’ on 26 and 27 April 2024, which consists of six artistic positions in the form of performance, installation, discourse and sound.

‘Lifelikeness & beyond’ is the fourth edition of the “Politics of the Machines” conference series, founded by Laura Beloff (Aalto University Helsinki) and Morten Søndergaard (Aalborg University Denmark) and organized in collaboration with RWTH Aachen University, LOGOI Institute for Philosophy and Discourse and PACT Zollverein in Essen.

Theodore von Kármán Fellowship to Professor Reiner Grundmann

Reiner Grundmann, Professor of Science and Technology Studies (STS) at the University of Nottingham, has been awarded the Theodore von Kármán Fellowship by RWTH Aachen University.

Professor Holger Hoos (Chair for Methodology of Artificial Intelligence), Professor Frank Piller (Chair of the Institute for Technology and Innovation Management) and KHK c:o/re Director Professor Stefan Böschen jointly applied for the fellowship. The fellowship thus strengthens interdisciplinary cooperation in the field of artificial intelligence (AI).

The fellowship enables Reiner Grundmann to spend seven weeks at the Käte Hamburger Kolleg: Cultures of Research (c:o/re) at RWTH Aachen University, where he will work on a project entitled “Communication Unbound: The Discourse of Artificial Intelligence” from April to May 2024. It investigates the discourse on forms of AI based on large language models and the challenges they pose to society. His current work focuses on the relation between knowledge and decision making, with a special interest in the role and nature of expertise in contemporary societies. To present the outcomes of this fellowship, Reiner Grundmann will give a public university lecture on May 15, 2024, 5-6.30 pm, at KHK c:o/re, Theaterstr. 75.

RWTH Kármán-Fellowships are funded by the Federal Ministry of Education and Research (BMBF) and the Ministry of Culture and Science of the German State of North Rhine-Westphalia (MKW) under the Excellence Strategy of the Federal Government and the Länder.

How a well-crafted brain model has influenced research

HANS EKKEHARD PLESSER

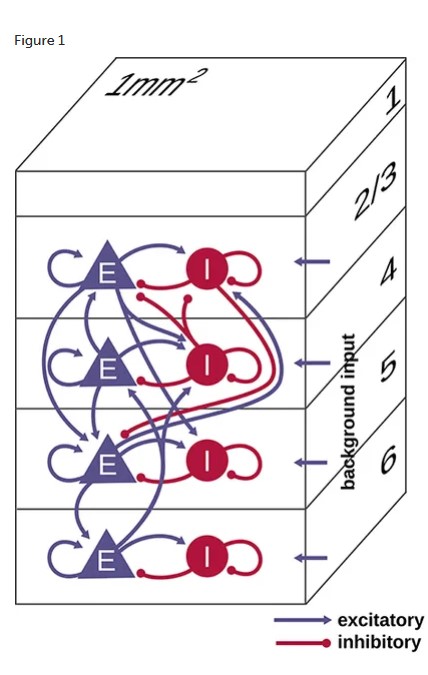

On the tenth anniversary of the publication of a model of a cortical microcircuit by Potjans and Diesmann (2014), 14 experts in computational neuroscience and neuromorphic computing will meet at the KHK c:o/re, at RWTH Aachen, to discuss their experiences in working with this model.

This event is a unique opportunity to gain insights on the effect that the Potjans-Diesmann model is having on computational neuroscience as a discipline. In light of the success of the model, the participants will reflect on why active model sharing and re-use is still not common practice in computational neuroscience.

Hans Ekkehard Plesser

Hans Ekkehard Plesser is an Associate Professor at the Norwegian University of Life Sciences.

His work focuses on simulation technology for large-scale neuronal network simulations and reproducibility of research in computational neuroscience.

Computational neuroscience, the field dedicated to understanding brain function through modelling, is dominated by small models of parts of the brain designed to explain the results of a small set of experiments, for example animal behavior in a particular task. Such ad hoc models often set aside much knowledge about details of connection structures in brain circuits. This limits the explanatory power of these models. Furthermore, these models are often implemented in low-level programming languages such as C++, Matlab, or Python and are shared as collections of source code files. This makes it difficult for other scientists to re-use these models, because they will need to inspect low-level code to verify what the code actually does. One might thus say that these models are formally, but not practically FAIR (findable, accessible, interoperable, reusable).

The model of the cortical microcircuit published by Potjans and Diesmann (2014) pioneered a new approach, quite different to previous practice. For good reasons, this new approach made the model remarkably popular in computational neuroscience. Based on a well-documented analysis of existing anatomical and physiological data, Potjans and Diesmann provided a bottom-up crafted model of the neuronal network found under one square millimeter of cortical surface. Their paper describes the literature, data analysis and data modeling on which the model is based, and provides a precise definition of the model.

In addition to their theoretical definition of the model, they created an implementation which is executable on the domain-specific high-level simulation tool NEST. They complemented this implementation with thorough documentation on how to work with the model. They even created an additional implementation of the model in the PyNN language, so that the model can be executed automatically on a wide range of neuronal simulation tools, including on neuromorphic hardware systems.

These efforts have led to a wide uptake of the model in the scientific community. Hundreds of scientific publications have cited the Potjans-Diesmann model. Several groups in computational neuroscience have integrated it in their own modelling efforts. The model has also had a key role in driving innovation in neuromorphic and GPU-based simulators by providing a scientifically relevant standard benchmark for correctness and performance of simulators.

References

Potjans, T. C., Diesmann, M. 2014. The cell-type specific cortical microcircuit: Relating structure and activity in a full-scale spiking network model. Cerebral Cortex 24(3): 785-806.

van Albada S. J., Rowley A. G., Senk, J., Hopkins, M., Schmidt, M., Stokes, A.B., Lester, D.R., Diesmann, M., and Furber S.B. 2018. Performance Comparison of the Digital Neuromorphic Hardware SpiNNaker and the Neural Network Simulation Software NEST for a Full-Scale Cortical Microcircuit Model. Frontiers in Neuroscience. 12:291.

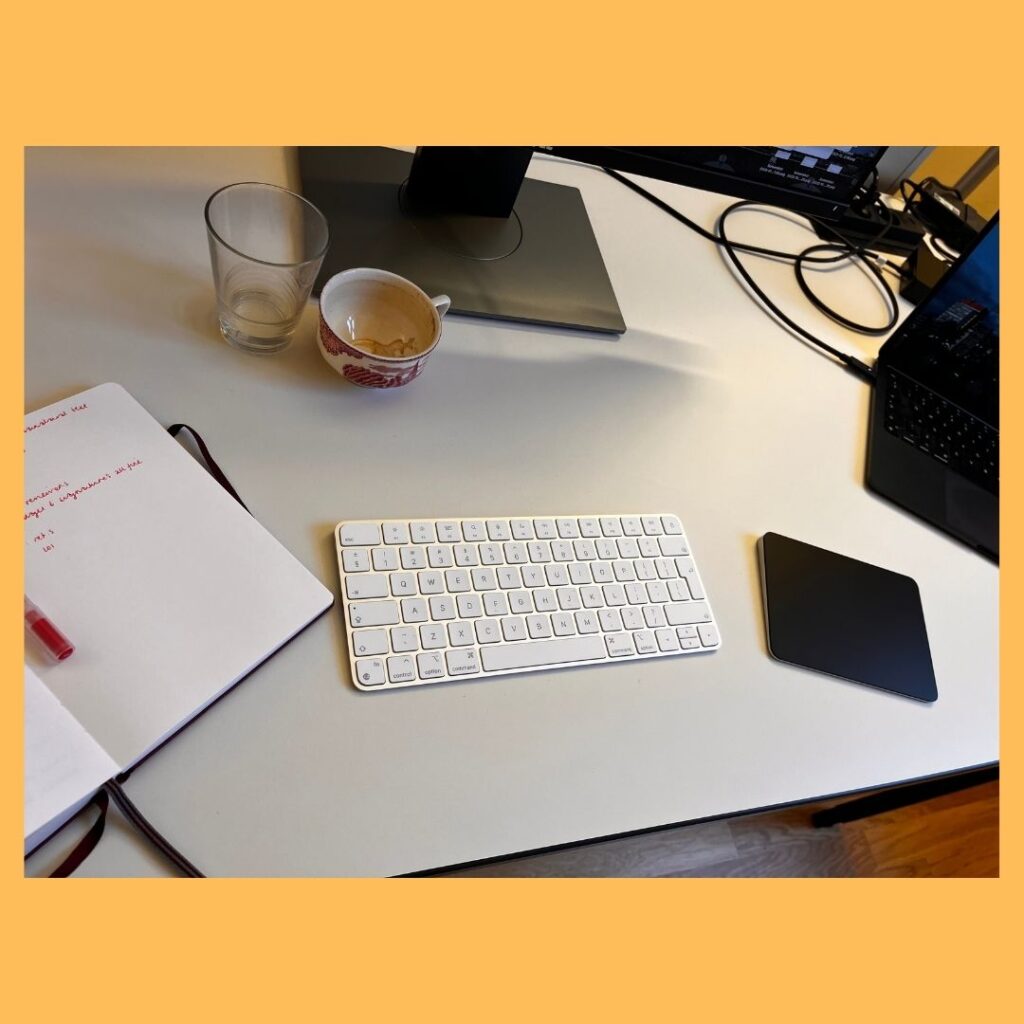

Objects of Research: Sarah R. Davies

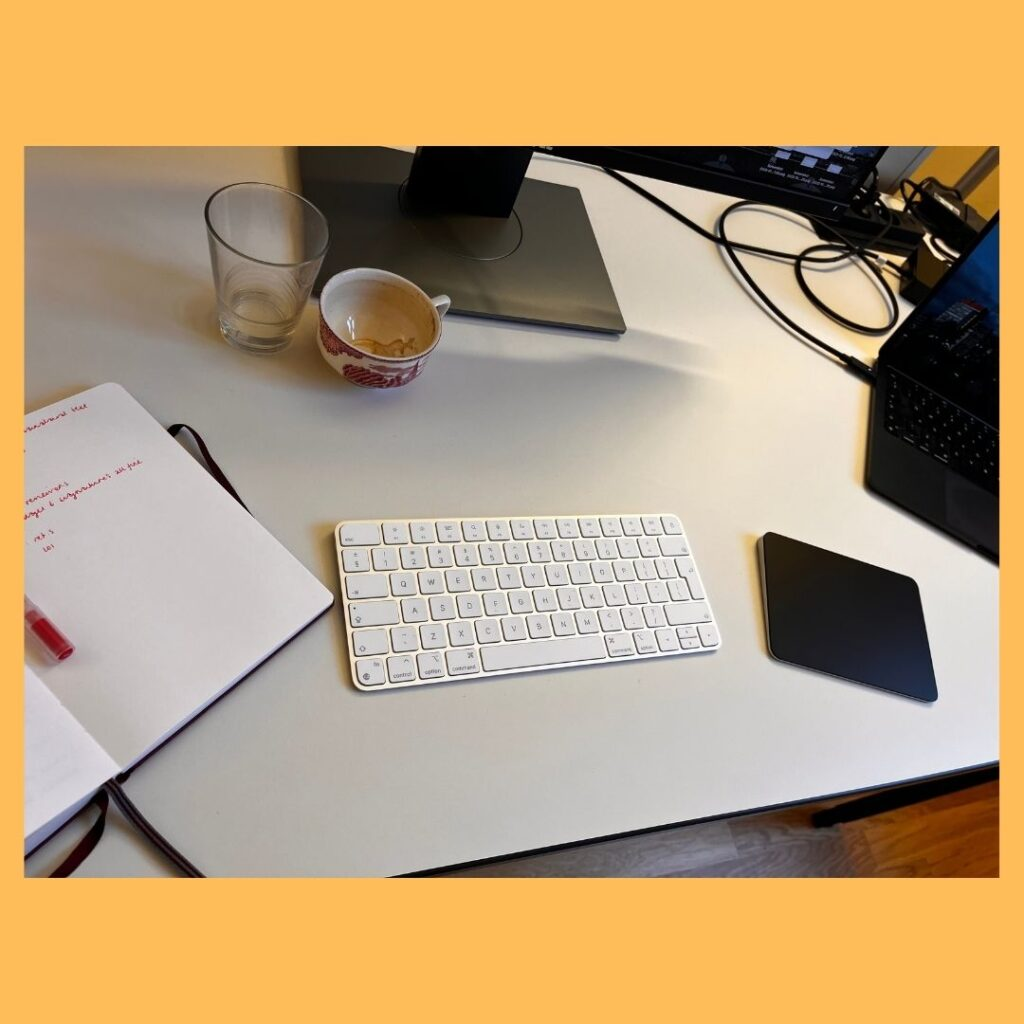

For today’s edition of the “Objects of Research” series, c:o/re Senior Fellow Sarah R. Davies gives an insight into her desk set-up. As a professor of Technosciences, Materiality, and Digital Cultures, she works on the intersections between science, technology, and society, with a particular focus on digital tools and spaces.

“I guess many academics would share some variant of this image: a careful arrangement of computer equipment, coffee, notepads, pens, and the other detritus that lives on (my) desk.

For me it’s important that the technical equipment is shown in conjunction with the paper notebook and pens. I’m fussy about all of these things – it’s distracting when my computer set-up isn’t what I’m used to, and I need to use very specific pens from a particular store – but ultimately my thinking lives in the interactions between them.

My colleagues and I are working on an autoethnographic study of knowledge production, and notice that (our) creative research work often emerges as we move notes and ideas from paper to computer (and back again).”

Would you like to find out more about our Objects of Research series at c:o/re? Then take a look at the pictures by Benjamin Peters, Andoni Ibarra, Hadeel Naeem, Alin Olteanu, Hans Ekkehard Plesser, Ana María Guzmán, Andrei Korbut, Erica Onnis, Phillip H. Roth, Bart Penders and Dawid Kasprowicz.