Categorizing is a central activity underlying digital technologies. Digital systems are based on acts of transformation from continuity and unity into discreteness and separation. These acts of transformation have been brought into everyday life in the multiple ways in which humans engage with AI systems and other forms of digital technologies. Everything we do is transformed, one could say, automatically into data. Therefore, categories have material effects. While they abstract, exclude, and simplify, they also produce relations, separate continuous processes and bring differences together.

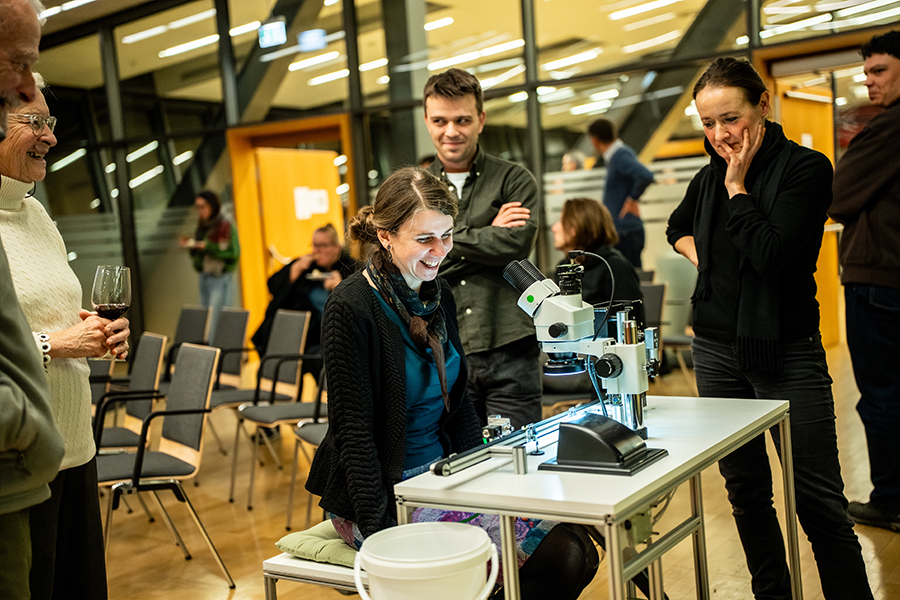

This topic of categorization in a digitalized world was the focus of the event The Ambiguity of Sorting, which took place on December 18, 2025, at the SuperC of RWTH Aachen University as part of the 8th edition of the international conference on History and Philosophy of Computing (HaPoC). Featuring works by the artists Sasha Bergstrom-Katz, Ren Loren Britton and Verena Friedrich, the aim was to explore how intelligence, the human being and life are categorized, and how we live together in the age of constantly operating sorting algorithms.

During the event, the material consequences of categorization labor were examined, including what kind of relations it produces and how to deal with the tension between the boundaries imposed by categories and the possibilities for creating productive relationships. Through installation, performance and conversations, Sasha Bergstrom-Katz, Ren Loren Britton and Verena Friedrich addressed questions such as: What kind of communities are enabled by digital technologies? Can we imagine a history of digital technology that is based on plural ways of understanding intelligence and the human? How can we design technologies of quantification and classification that bring people together?

The event kicked off with a video screening and performance of the project Unboxing Intelligence by Sasha Bergstrom-Katz. Two actors prompted the audience to participate in an intelligence test that highlighted the absurdity of the indicators and categories used to measure intelligence. The performance dealt with questions such as how intelligence is constructed and what kind of social relations are produced in the activity of testing. Bergstrom-Katz’ project positions the intelligence test kit not merely as a scientific tool, but as a powerful actor in its own right and casts it at the center of a critical investigation into the construction and dissemination of intelligence. Composed of toys, games, puzzles, and instructional booklets, all organized into a handy kit, the test operates as a charismatic yet destructive object, one that has shaped and circulated ideas of measurable intelligence across the twentieth century and beyond. Approaching it through the lenses of artistic research, performance studies and material cultures, the project theorizes the test as a nesting doll of scientific ready-mades; it is a mass-produced and ideologically loaded object constituted of ready-made theories, objects, and performances.

How does artificial intelligence influence our understanding of human intelligence, Sasha Bergstrom-Katz?

To be frank, I do not think artificial intelligence should influence or impact understandings of human intelligence. I’m not convinced of it as comparative to cognition or brain function. To start, my project, following others from the history and philosophy of the human sciences, queries just what is meant by the term “intelligence” and how this as a scientific object or concept is unstable and often defined more by the means of measuring or studying it than by its actual existence as a stable object. As such, just what we mean when we say “intelligence” is already unstable. It’s not too difficult to come up with definitions or processes of human intelligence via artificial intelligence, but then AI becomes the lens through which to see it and it takes for granted the fact that “intelligence” is a construct. Uljana Feest, for example, talks about these kinds of things as folk psychological concepts and this is similar to how Lorraine Daston talks about scientific objects. These concepts and objects, such as intelligence, are plucked from common usage and are brought into scientific spheres, yet they are really only defined by how we measure them (temperature by the thermometer, intelligence by tests).

I’m not actually particularly interested in so-called intelligence, but instead how ideas of intelligence shape relations. As such, I am also most interested in observing how AI does this, even though it’s really not a planned subject of research. It has already endangered wages, had negative environmental impacts, and has misconstrued and misdelivered information to millions of people through various interfaces such as ChatGPT. There is potential, I agree, for it to be narrowly useful in medical research or for making some things like language translation (however imperfect) more accessible and more accurate, yet at what cost?

Returning to your question, again, I do not study this, but I have observed an uptick in interest in human intelligence (from the dog whistle uses of IQ in right-wing politics, to advances in gene editing, and, as you mention, a comparison between AI and IQ). I wonder to what extent all of these things are linked.

In the following talk, Verena Friedrich presented her project ERBSENZÄHLER[1] (literally, “pea counter”), a series of industrial-looking sorting machines, such as the EZ Quality Sorter V2 for assessing the quality of peas. The project highlights the uneasiness inherent in the sorting process, as well as the contingency that this task entails. What makes a pea a good or a bad one? This question already shows that the categories underlying machines can be absurd and that they force us to think in binary terms, pressing the variety of life into fixed categories. The project explores the increasing quantification and datafication of life through mathematical-technical procedures and systems (from counting and sorting to statistics and computer-aided processes) and the worldview that accompanies them. In the machines, pea seeds are analyzed, classified, and sorted according to various parameters: from simple, quantifiable features such as weight to more complex ones like color or quality. Each station engages with different aspects of the quantification of life and the history of automation. In her presentation, Friedrich used the ERBSENZÄHLER project as an aesthetic and conceptual lens to reflect on broader questions and make them more tangible: What shifts occur when fundamental human activities like counting, classifying, and sorting are increasingly delegated to machines and “intelligent” systems? In this context, she expressed particular interest in the tension that arises when algorithmic decision-making replaces subjective, situated, and ambiguous processes of human judgment.

What do you think gets lost when human judgment is replaced by algorithms, Verena Friedrich?

In regard to my work EZ Quality Sorter V2, what gets lost when human judgment is replaced by algorithms is the necessity of a material and situated engagement with a complex real-world situation.

Instead of reflecting on a case within our own frame of experience, we leave decisions to so-called “intelligent” systems. While these have been trained on the basis of human decisions, they ultimately operate through condensed, pattern-based logics that often remain opaque. The technical system becomes an entity with its own agency, which is no longer perceived as human-made or accountable in familiar ways. Similar to large bureaucratic or technical infrastructures, it becomes unclear who is responsible when a decision later turns out to be wrong or harmful.

Delegating decisions to machines removes the need to spend time with complexity, to slow down, and to confront ambiguity. This may free us from the burden of uncertainty and responsibility, but it also diminishes our agency to shape the world we live in, and the value systems that make that world worth living.

Ren Loren Britton’s installation and conversation invited the audience to experience technologies developed by the disability justice movement, in which balloons become a technology that lets people with hearing disabilities experience the sound of films. Attendees could feel this change in embodied experience, and the categorical shift it implies, for themselves by holding large, helium-filled balloons, which allowed a change of perception and perspective. Britton addressed questions such as which technologies are recognized as innovation, and which ones address different bodily needs but remain hidden. They discussed the different forms in which disabled bodies are represented and the possibilities for giving them agency back. In their project Indexing Tech for Disability Justice: blocks, boards, plates, shafts & volumes, Britton negotiates the affordances of naming affinity and sites of embodiminded difference towards an index of technological hir – his – her – story, an index that would serve an intersectional disability justice tech movement. Moving against a techno-racial-capitalist narrative that assumes people are only users – to be impacted upon and extracted from – this installation works with a series of historical intersectional crip, trans*, BIPoC innovations that shift the terms of what we think of when we think of technology.

How can technology help foster a sense of community and provide more options for categorization, Ren Loren Britton?

Technology when understood as socio technical systems – and categorization, when taken as ways of naming and organizing for strategic means – can draw communities to us, or can find us in the midst of community practices that have always already been technological – but which may not have been recognized as such – yet. In my work with the aesthetics of access – making with plural sensory worldings – technologies become tools to sense the world through our bodyminds.

Categories become operations of finding those we share affinities, politics and experiences with and pluralizing options for connection. Technologies foster community through providing more options for accessing, relating and facilitating operations of love.

In a concluding panel discussion, the artists explored together how technology is being narrated, what is included in that field, and how what is recognized as a technology or innovation already depends on assumptions about who the agents of its development are. The event demonstrated the ambiguous power of categories. Categories can act as a tool of oppression when they are used to reduce what is diverse and continuous to discrete and enclosed units. But they can also serve as a tool for justice, as when indexing disability helps to address the needs of a diversity of bodies.

[1] In German, an Erbsenzähler (“pea counter”) is commonly understood to be a nitpicker: a person who is pedantic, petty, and overly precise in adhering to details and rules.

Photos by Christian van’t Hoen