Is Artificial Intelligence (AI) capable of modeling and replicating human sensory associations? This question was explored at the KHK c:o/re on April 28, 2025, as part of the science-art installation experiment “Melodic Pigments: Exploring New Synesthesia” created by the Japanese media artist Yasmin Vega (Tokyo University of the Arts) in cooperation with KHK c:o/re alumni fellow Masahiko Hara (Institute of Science Tokyo).

The goal of the installation experiment is to explore the relationship between sound and color through the phenomenon of synesthesia, in which one sensory perception involuntarily triggers another. The primary focus is on chromesthesia, a form of auditory synesthesia in which sounds evoke the perception of colors.

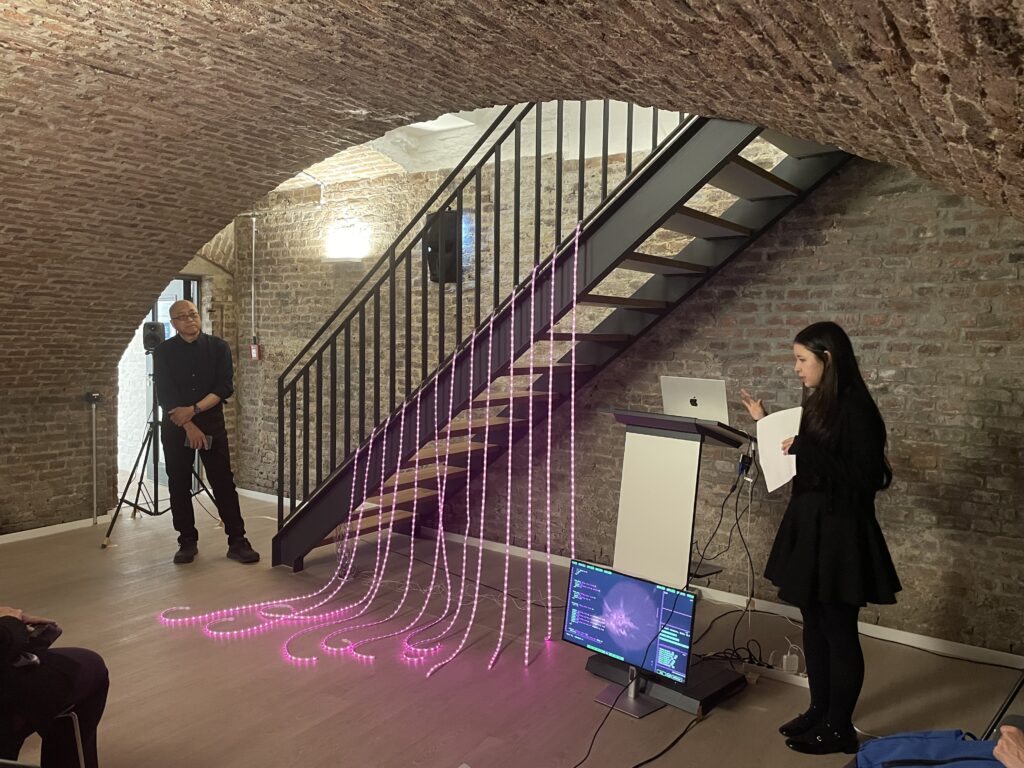

During the performance, Yasmin Vega played electronic music using live programming, while an AI model trained on sound-color associations predicted and visualized corresponding colors in real time. The model was trained on subjective data reflecting Yasmin Vega’s personal associations between sound and color. Throughout the performance, the AI analyzed the incoming sound every three seconds and determined the corresponding colors based on what it had learned from this training data, creating a fluid interplay between auditory and visual elements. The colors chosen by the AI were shown on a series of hanging light tubes and as a morphing pattern on a computer screen.

The installation experiment examines the AI’s ability to model and replicate human sensory associations, with a focus on internal visualization processes and the sensory capabilities of machines. Yasmin Vega’s performance indicated that the AI could approximate her personal perceptions, generating sound-color associations that often matched her expectations.

This installation experiment builds on earlier work by KHK c:o/re alumni fellow Masahiko Hara, who explored the integration of artistic strategies into science and technology during his fellowship. In January 2024, he presented the art installation “Unfelt Threshold,” developed in collaboration with the artist Aoi Suwa, which explored the perceptual capabilities of machines in response to unpredictable visual stimuli. Through these experiments, Masahiko Hara aims to open up new perspectives at the intersection of materials science and nanotechnology within scientific engineering. The interplay between science and artistic practice reflects a central research interest of the KHK c:o/re: how artistic methods, including performances such as “Melodic Pigments,” can contribute to epistemic questions within an expanded framework of science and technology.

Interview with Yasmin Vega

How do you perceive sound and color?

When I listen to a high-pitched sound, I imagine yellow. When I listen to a dark sound, like a deep bass, I imagine a dark color, like dark green or deep purple. The volume of the sound also changes how I perceive it. If I listen to a loud sound, I imagine red. And even if I listen to the same melody, if it’s played on different instruments, I imagine a different color.

What surprised you most about the visualizations of the AI?

What surprised me the most was how often the AI’s visualization matched the color that I actually imagine when I’m performing. It almost felt like the AI could understand my personal sense of color. From this whole performance, I realized how useful it can be to work with AI when it comes to expressing something that’s really personal and hard to explain or share with others.

What do you think about AI and art working together? Where do you see challenges?

I wanted to use the AI just as a tool in my artworks. I felt that if I collected the training data myself and developed the model myself, then using AI is just like using a tool. So the final artwork was really my work. I didn’t feel that just writing a prompt and letting the AI generate an image is really artwork. That’s why I originally wanted to control the AI as much as possible, but now I feel a little more relaxed about it. Now I’m looking for the unpredictable results that come when I can’t freely control the AI. I can say that I’m not so cautious anymore.

One challenge I see is the amount of data. This time I only used 300 samples to train the AI model. There are so many sounds in the world, so it’s basically impossible to cover all of them. But improving the model’s accuracy doesn’t automatically mean that the artwork itself gets better. So I think the creative value comes from something more than just how smart or how accurate the AI is.

Photos and video by Jana Hambitzer
