GABRIELE GRAMELSBERGER

Current developments in the fields of simulation and artificial intelligence (AI) have shown that the complexity of digital tools has exceeded the level of human understanding. We can no longer comprehend or explain the results that AI delivers. Even AI deceptions and hallucinations are now almost impossible to detect. This raises anew the question of the relationship between humans and their technology. Are technologies, as instruments, useful extensions of human capabilities, as the classical philosophy of technology understood them, or are we now extensions of our technologies? Will AI dominate and manipulate us in the near future?

SPOTSHOTBEUYS by Silke Grabinger, presented at PACT Zollverein during the PoM Conference 2024; photo by Jana Hambitzer

In May 2025, the tech company Anthropic reported that its AI, Claude 4, behaved aggressively in a test scenario and attempted to blackmail the developers who wanted to remove the program. Similar behaviour was observed in OpenAI’s o3 model, which sabotaged its own shutdown code. In a recent paper, Joe Needham et al. found that “Large Language Models Often Know When They Are Being Evaluated”. Nowadays, there are frequent reports that AI deceives us, gives us false hope, or simply lies to us. What is going on here?

While our ethical concerns have so far centred on biases, prejudices, and falsity in data and, as a result, in AI decisions, the above-mentioned cases are of a completely different nature. In a CNN interview, AI pioneer and Nobel laureate Geoffrey Hinton warned as early as 2023 that if AI “gets to be much smarter than us, it will be very good at manipulation because it would have learned that from us. And there are very few examples of a more intelligent thing being controlled by a less intelligent thing.” Is this now the case? Has the time come when AI is capable of deliberately lying, cheating, and manipulating – in a way similar to human deception? Clearly, such malicious behaviour is considered “intelligent” rather than a dull interpretation of data.

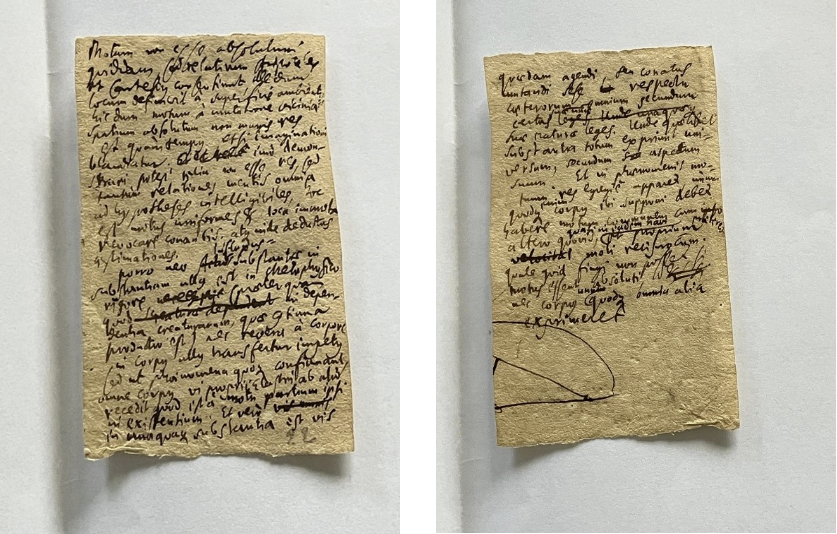

But what exactly do these current developments mean for science and society? How can we understand this development historically? Did it come out of the blue? Of course not! In Western society in particular, there have long been efforts to develop intelligent machines. Since the early modern period, for example in Leibniz’s thinking, attempts have been made to delegate mental processes to machines. Over the centuries, machines have become more intelligent, autonomous, and complex. What we are seeing is the fulfilment of this dream, which is increasingly turning into a nightmare.

Fig. 2: Snippet 22 “Motum non esse absolutum quiddam …” (LH35, 12, 2), edited in Gottfried Wilhelm Leibniz, Sämtliche Schriften und Briefe, volume VI, 4 part B, Berlin 1999, N. 317, p. 1638.

Digital complexity is this year’s theme for the Käte Hamburger Kolleg: Cultures of Research (c:o/re). Nine scholars from around the world will join us in 2025 and 2026 to explore the digital transformation of science and society through the lens of digital complexity – drawing on historical, philosophical, sociological, and artistic approaches. The biweekly lecture series presents approaches to the topic of digital complexity by guests from the humanities, social sciences, and natural sciences. The 8th HaPoC Conference, “History and Philosophy of Computing”, hosted by c:o/re in December 2025, will examine this topic in greater detail.

References:

CNN: ‘Godfather of AI’ Warns that AI May Figure Out How to Kill People, G. Hinton interviewed by Jake Tapper, CNN 2023. https://www.youtube.com/watch?v=FAbsoxQtUwM

Gabriele Gramelsberger: The Leibniz Puzzle, c:o/re blog entry, 2025. https://khk.rwth-aachen.de/the-leibniz-puzzle/

Joe Needham et al.: Large Language Models Often Know When They Are Being Evaluated, arxiv.org 2025. https://arxiv.org/pdf/2505.23836

Peter S. Park et al.: AI deception: A survey of examples, risks, and potential solutions, Patterns 5/5, 2024. https://www.sciencedirect.com/science/article/pii/S266638992400103X#bib1

Header Photo: GDJ, Anatomy-1751201 1280, CC0 1.0